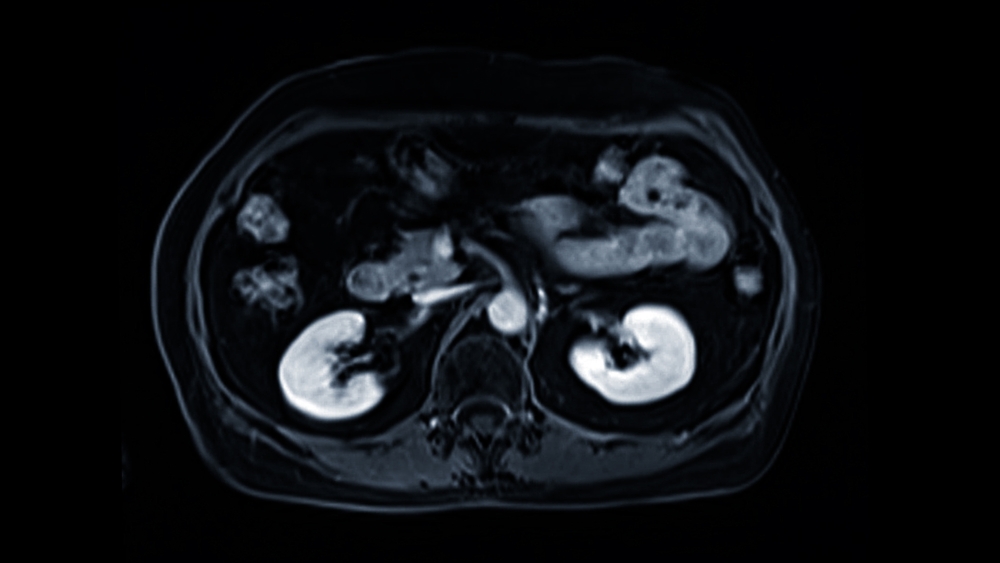

In medical imaging, particularly for prostate MRI, how we teach a computer to “see” is everything. The goal is to classify suspicious lesions accurately, separating clinically significant cancer from benign tissue. At the heart of this process are “features”—numerical descriptions that capture the essence of what an image shows. Two major approaches dominate this field: handcrafted radiomic features and deep features. Handcrafted features rely on predefined statistical or geometric descriptors that a human expert designs. In contrast, deep features are automatically learned by complex neural networks, which discover patterns on their own.

Both paradigms are central to modern prostate MRI lesion classification and radiomics research. Understanding their strengths and weaknesses is key to building trustworthy and effective AI tools for cancer detection. Comparing radiomic and deep learning features side by side shows how each method contributes to accuracy, interpretability, and real-world clinical use.

Understanding Features in MRI-Based Classification

Before comparing handcrafted and deep learning approaches, it’s important to clarify what “features” are and why they are so fundamental to building effective AI models for medical diagnosis.

What are “features” in radiomics and AI?

In the context of radiomics and AI, a feature is a specific, measurable property or characteristic of an image. Think of them as numerical fingerprints that describe what’s happening inside a region of interest, such as a suspected prostate lesion. These features can quantify various aspects, including the brightness of pixels, the shape of a lesion, or the texture of the tissue.

For example, a feature could be a number representing the average signal intensity within a lesion on a T2-weighted image. Another could describe how rough or smooth the lesion’s texture appears on an ADC map. By extracting dozens or even thousands of these numerical descriptors, we convert a complex medical image into a structured dataset that a machine learning model can analyze to find patterns associated with cancer.
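To make the idea concrete, here is a minimal sketch of the simplest feature mentioned above: the average signal intensity inside a lesion. The 3x3 patch, its intensity values, and the mask are made up for illustration, and `mean_intensity` is a hypothetical helper, not part of any radiomics library.

```python
from statistics import mean


def mean_intensity(image, mask):
    """Average intensity of the pixels where the mask is 1."""
    values = [
        pixel
        for image_row, mask_row in zip(image, mask)
        for pixel, inside in zip(image_row, mask_row)
        if inside
    ]
    if not values:
        raise ValueError("mask selects no pixels")
    return mean(values)


# Toy T2-weighted patch (made-up intensities) and a lesion mask.
t2_patch = [
    [100, 120, 110],
    [90, 40, 50],
    [95, 60, 30],
]
lesion_mask = [
    [0, 0, 0],
    [0, 1, 1],
    [0, 1, 1],
]

lesion_mean = mean_intensity(t2_patch, lesion_mask)
print(lesion_mean)  # 45, the mean of 40, 50, 60 and 30
```

Repeating this for many descriptors and many lesions produces the table of numbers that a classifier is trained on.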

Why feature representation matters in prostate cancer detection

The quality of these features directly impacts the accuracy, reliability, and usefulness of any lesion classification model. If the features fail to capture the subtle differences between cancerous and non-cancerous tissue, the model built upon them will inevitably perform poorly. Poor feature representation can lead to missed diagnoses or unnecessary biopsies.

Excellent features, on the other hand, provide a clear and distinct signal for the model to learn from. They highlight the specific visual cues that an expert radiologist might look for, but they do so with quantitative precision and consistency. High-quality feature representation is the foundation for a model that can generalize across different patients, MRI scanners, and clinical settings, making it a trustworthy tool for enhancing diagnostic confidence. This is why the debate over deep versus handcrafted features in prostate MRI is so critical.

Handcrafted Radiomic Features

For many years, handcrafted radiomic features were the standard for quantitative image analysis. This approach involves experts defining a set of mathematical formulas to extract specific, pre-determined characteristics from an image.

Definition and feature categories

Handcrafted features are explicitly designed by researchers and engineers to quantify aspects of an image that are believed to have clinical relevance. They are typically grouped into three main categories:

- First-Order (Intensity-Based) Features: These describe the distribution of pixel or voxel intensities within a region of interest, without considering their spatial relationship. Examples include mean, median, standard deviation, skewness, and kurtosis of the signal intensity. They give a general sense of the lesion’s brightness and contrast.

- Shape-Based Features: These features quantify the geometry of the lesion. They describe its size, shape, and surface characteristics. Examples include volume, surface area, sphericity (how round it is), and compactness. These can help differentiate between well-defined, regular shapes and more irregular, spiculated ones that are often associated with aggressive tumors.

- Texture-Based Features: These are more advanced features that analyze the spatial relationships between pixels or voxels. They describe patterns like roughness, smoothness, and heterogeneity within the lesion. Common texture analysis methods include Gray-Level Co-occurrence Matrix (GLCM), Gray-Level Run-Length Matrix (GLRLM), and wavelet transforms. They can capture subtle tissue patterns that are not visible to the naked eye.
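The difference between first-order and texture features is easy to see on a toy example. The sketch below, written with the standard library only, computes three first-order statistics and a simple GLCM contrast for two made-up 4x4 patches. It assumes a single horizontal offset of one pixel and a non-symmetric matrix; real radiomics pipelines typically average over several directions and discretize intensities first. All function names here are illustrative.

```python
from collections import Counter
from statistics import mean, pstdev


def first_order(values):
    """Mean, standard deviation and skewness of a flat list of intensities."""
    mu = mean(values)
    sigma = pstdev(values)
    skew = 0.0 if sigma == 0 else mean([((v - mu) / sigma) ** 3 for v in values])
    return {"mean": mu, "std": sigma, "skewness": skew}


def glcm_horizontal(image):
    """Normalized co-occurrence of each pixel with its right-hand neighbor."""
    counts = Counter()
    for row in image:
        for left, right in zip(row, row[1:]):
            counts[(left, right)] += 1
    total = sum(counts.values())
    return {pair: n / total for pair, n in counts.items()}


def glcm_contrast(glcm):
    """Weights each co-occurring pair by its squared gray-level difference."""
    return sum(p * (i - j) ** 2 for (i, j), p in glcm.items())


def flatten(image):
    return [v for row in image for v in row]


# Two patches with identical histograms but different spatial arrangement.
smooth = [[1, 1, 1, 1], [1, 1, 1, 1], [2, 2, 2, 2], [2, 2, 2, 2]]
rough = [[1, 2, 1, 2], [2, 1, 2, 1], [1, 2, 1, 2], [2, 1, 2, 1]]

stats_smooth = first_order(flatten(smooth))
stats_rough = first_order(flatten(rough))
contrast_smooth = glcm_contrast(glcm_horizontal(smooth))
contrast_rough = glcm_contrast(glcm_horizontal(rough))
```

Both patches contain eight 1s and eight 2s, so their first-order statistics match exactly, while the horizontal GLCM contrast is 0 for the smooth patch and 1 for the checkerboard. That is the sense in which texture features capture spatial relationships that intensity statistics ignore.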

Strengths of handcrafted features

Handcrafted features offer several distinct advantages that have made them valuable in clinical research:

- Easy to interpret and visualize: Because each feature is defined by a specific formula (e.g., “sphericity”), its meaning is clear. A radiologist can easily understand that a low sphericity value corresponds to an irregularly shaped lesion, which aligns with their existing medical knowledge.

- Aligned with radiological understanding: Many handcrafted features are quantitative versions of concepts radiologists already use. For instance, the mean ADC value of a lesion, measured on the ADC map derived from diffusion-weighted imaging, is a first-order feature. This direct link to clinical practice makes models built on these features easier to trust and validate.

- Lower data requirements: Compared to deep learning models, models using handcrafted features can often be trained effectively on smaller, more curated datasets. This is a significant advantage in medical imaging, where large, high-quality annotated datasets can be difficult and expensive to acquire.

Limitations of handcrafted approaches

Despite their strengths, handcrafted features have notable limitations. Their performance is highly dependent on the accuracy of the initial lesion segmentation; a small error in outlining the lesion can significantly alter the feature values.

Furthermore, they are limited in their ability to capture abstract, high-level patterns. A predefined formula for “roughness” may not capture all the complex textural variations that differentiate cancer subtypes. Finally, extracting hundreds of features often leads to redundancy, where many features provide similar information, requiring complex feature selection steps to build a robust model.
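One common way to deal with that redundancy is a correlation filter: keep a feature only if it is not strongly correlated with one already kept. The sketch below is a minimal, standard-library version of that idea. The feature names, the values, and the 0.95 threshold are assumptions chosen for illustration, and a real study would combine this with other selection steps.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length lists (neither may be constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / sqrt(vx * vy)


def drop_redundant(features, threshold=0.95):
    """Greedy filter: keep a feature only if |r| < threshold with all kept ones."""
    kept = []
    for name, values in features.items():
        if all(abs(pearson(values, features[k])) < threshold for k in kept):
            kept.append(name)
    return kept


# Made-up feature values for five lesions.
features = {
    "volume_mm3": [100, 200, 300, 400, 500],
    "surface_area_mm2": [110, 190, 310, 390, 510],  # nearly tracks volume
    "adc_mean": [900, 700, 950, 650, 800],
}

selected = drop_redundant(features)
print(selected)  # ['volume_mm3', 'adc_mean']
```

Because the filter is greedy, the result depends on the order in which features are visited, which is one reason the selection step itself needs to be validated.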

Deep Features in MRI-Based Lesion Classification

As an alternative to manually designing features, deep learning offers a powerful, automated approach. Here, the model learns to identify the most important features directly from the image data itself.

What are deep features?

Deep features are hierarchical representations of an image that are automatically learned by a deep neural network, such as a Convolutional Neural Network (CNN) or a Vision Transformer. Instead of being programmed by a human, these features are discovered by the model as it trains on a large dataset of images. The “deep” in deep features refers to the multiple layers of processing within the network, which build increasingly complex and abstract representations of the input data.

How deep learning extracts image features

CNNs, the most common architecture for image analysis, use a series of filters (or kernels) to scan an image. In the initial layers, these filters learn to detect simple, low-level features like edges, corners, and intensity gradients.

As the data passes through deeper layers of the network, the outputs from the previous layers are combined to form more complex features. For example, a combination of edge detectors might learn to identify textures or simple shapes. In the final layers, the network can represent high-level semantic concepts, such as the overall structure of the prostate gland or patterns indicative of a clinically significant lesion. This process allows the model to learn a rich, multi-level feature hierarchy without any human guidance on what to look for.
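The basic operation behind those filters can be sketched in a few lines. The example below slides a 3x3 kernel over a tiny image and applies a ReLU, which is roughly what a single channel of a first convolutional layer does. One important assumption: the vertical-edge kernel here is written by hand to stand in for a learned filter, whereas a real CNN would arrive at its kernel values through training.

```python
def correlate2d(image, kernel):
    """Valid-mode sliding-window sum of products (what CNN layers call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Keep positive responses, zero out the rest."""
    return [[max(0, v) for v in row] for row in feature_map]


# Toy image: dark on the left, bright on the right.
image = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]

# Hand-written stand-in for a learned filter that responds to dark-to-bright edges.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = relu(correlate2d(image, vertical_edge))
print(feature_map)  # [[0, 3, 3, 0]]
```

The response is zero over the flat regions and positive only where the window straddles the boundary. Deeper layers apply the same operation to feature maps like this one instead of to raw pixels.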

Strengths of deep features

The automated nature of deep feature extraction provides several key benefits:

- Capture complex spatial and contextual information: Deep learning models excel at identifying intricate, non-linear patterns and spatial relationships that are nearly impossible to define with handcrafted formulas. They can consider the context of a lesion within the broader anatomy.

- Robust to noise and scanner variability: When trained on large and diverse datasets, deep learning models can become robust to variations in image quality, noise levels, and differences between MRI scanners from various manufacturers. They learn to focus on the underlying biological signals rather than superficial imaging artifacts.

- Can outperform traditional methods on large datasets: With sufficient training data, deep feature-based models often achieve higher accuracy in classification tasks compared to models based on handcrafted features. Their ability to learn from vast amounts of information enables them to uncover more predictive patterns.

Limitations and challenges

The power of deep features comes with its own set of challenges:

- Require large annotated datasets: The primary bottleneck for deep learning is the need for massive amounts of high-quality, expertly annotated data. Creating these datasets is time-consuming and expensive.

- Less interpretable (“black box” issue): The features learned by a deep network are complex mathematical constructs that are not easily understood by humans. This “black box” nature can make it difficult for clinicians to trust a model’s prediction, as it’s not always clear why the model made a particular decision.

- High computational cost: Training deep learning models requires significant computational power, including specialized hardware like GPUs, and can take days or even weeks to complete.

Comparing Deep and Handcrafted Features

The choice between handcrafted and deep features involves a trade-off between performance, interpretability, and data requirements. Each has a role to play in the development of AI for prostate cancer detection.

Accuracy and generalization

In head-to-head comparisons, deep features often outperform handcrafted features in terms of raw accuracy, especially when large and diverse datasets are available for training. Because deep learning models learn features tuned to the specific task, they can uncover more predictive patterns than a predefined set of radiomic features. When the training data covers enough variety, this can lead to better generalization, meaning the model performs well on new, unseen data from different hospitals or scanners. However, on smaller datasets, handcrafted features can sometimes be more robust and less prone to overfitting.

Interpretability and clinical trust

This is where handcrafted features have a clear advantage. A model that predicts cancer risk based on “lesion sphericity” and “ADC mean” is intuitively understandable to a radiologist. In contrast, a deep learning model’s decision is based on the activation of thousands of abstract neurons. This lack of transparency can be a barrier to clinical adoption. To address this “black box” problem, researchers are developing explainability tools (like saliency maps) that highlight which parts of an image a deep learning model focused on, providing a visual rationale for its prediction.
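One simple member of this family of explainability tools is occlusion sensitivity: blank out one pixel at a time and record how much the model's score drops. The sketch below illustrates the idea under loud assumptions. `toy_model` is a hypothetical linear scorer standing in for a trained network, and its weights and the image values are made up. Gradient-based saliency maps follow a different recipe that needs an autodiff framework.

```python
def occlusion_map(model, image, baseline=0.0):
    """Score drop caused by replacing each pixel, one at a time, with a baseline."""
    base_score = model(image)
    heat = []
    for r, row in enumerate(image):
        heat_row = []
        for c, _ in enumerate(row):
            occluded = [list(x) for x in image]  # copy so the input is untouched
            occluded[r][c] = baseline
            heat_row.append(base_score - model(occluded))
        heat.append(heat_row)
    return heat


# Hypothetical "trained" weights: the model only looks at two pixels.
WEIGHTS = [
    [0.0, 0.0, 0.0],
    [0.0, 2.0, 1.0],
    [0.0, 0.0, 0.0],
]


def toy_model(image):
    """Stand-in classifier: a weighted sum of pixel intensities."""
    return sum(
        w * p
        for weight_row, pixel_row in zip(WEIGHTS, image)
        for w, p in zip(weight_row, pixel_row)
    )


image = [
    [5.0, 5.0, 5.0],
    [5.0, 3.0, 4.0],
    [5.0, 5.0, 5.0],
]

heat = occlusion_map(toy_model, image)
print(heat)  # [[0.0, 0.0, 0.0], [0.0, 6.0, 4.0], [0.0, 0.0, 0.0]]
```

The map is non-zero only at the two pixels the model actually uses, which is the kind of visual rationale a clinician can check against the anatomy.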

Combining both approaches — hybrid models

Recognizing the complementary strengths of both methods, a growing area of research focuses on hybrid models. These models combine handcrafted radiomic features with deep features to leverage the best of both worlds. For instance, handcrafted features can be fed into a neural network alongside the raw image data. Research has shown that these hybrid approaches can improve classification performance by providing the model with both interpretable, expert-defined information and complex, data-driven patterns.
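The simplest form of such a hybrid is late fusion: put the handcrafted values and the deep embedding on a comparable scale, then concatenate them into one vector per lesion before the final classifier. The sketch below assumes exactly that design, which is only one of several ways to build a hybrid model. The feature values and the three-dimensional "embedding" are made up.

```python
def zscore_columns(rows):
    """Standardize each column to zero mean and unit variance across lesions."""
    scaled_columns = []
    for column in zip(*rows):
        mu = sum(column) / len(column)
        sd = (sum((v - mu) ** 2 for v in column) / len(column)) ** 0.5
        scaled_columns.append([(v - mu) / sd if sd else 0.0 for v in column])
    return [list(row) for row in zip(*scaled_columns)]


def fuse(handcrafted, deep):
    """Late fusion: concatenate the two feature vectors lesion by lesion."""
    return [h + d for h, d in zip(handcrafted, deep)]


# Two lesions. Handcrafted columns: sphericity, mean ADC (made-up values).
handcrafted = [
    [0.62, 850.0],
    [0.91, 1200.0],
]
# Hypothetical 3-dimensional embedding taken from a CNN's penultimate layer.
deep = [
    [0.1, -0.3, 0.7],
    [0.4, 0.2, -0.5],
]

fused = fuse(zscore_columns(handcrafted), zscore_columns(deep))
print(len(fused), len(fused[0]))  # 2 5
```

Standardizing first matters because raw ADC values are orders of magnitude larger than typical embedding entries and would otherwise dominate any distance-based or linear model.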

Technical and Computational Considerations

Beyond performance and interpretability, practical considerations like data availability, feature selection, and reproducibility play a crucial role in deciding which feature extraction method to use.

Data requirements and preprocessing

The scale of data required differs significantly. Handcrafted radiomics can yield meaningful results from datasets with a few hundred patients, as the features are explicitly defined. Deep learning models, however, are data-hungry. They typically require thousands of annotated examples to learn robust and generalizable features, and their performance scales with the size and diversity of the training data. Preprocessing steps, such as image normalization and co-registration, are critical for both approaches but are especially important for ensuring consistency in large-scale deep learning projects.

Feature selection and dimensionality reduction

Both methods can generate a large number of features, which increases the risk of model overfitting (learning noise instead of signal). With handcrafted features, this is addressed through statistical feature selection methods that identify and remove redundant or irrelevant features. For deep models, this is handled implicitly by the network’s architecture and by regularization techniques (like dropout), which discourage the model from relying too heavily on any single feature.
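Dropout itself is a small piece of logic. The sketch below shows the commonly used "inverted" variant on a plain list of activations: during training, each value is zeroed with probability `p` and the survivors are scaled up so the expected sum is unchanged, while at inference time the input passes through untouched. The function and its parameters are illustrative, not taken from any framework.

```python
import random


def dropout(activations, p=0.5, rng=None, training=True):
    """Inverted dropout: zero each value with probability p, rescale the rest."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)
    rng = rng or random.Random()
    scale = 1.0 / (1.0 - p)
    return [a * scale if rng.random() >= p else 0.0 for a in activations]


activations = [1.0, 2.0, 3.0, 4.0]

# Training: a seeded generator makes the random mask repeatable.
dropped = dropout(activations, p=0.5, rng=random.Random(0))

# Inference: dropout is switched off and the activations are returned as-is.
unchanged = dropout(activations, p=0.5, training=False)
```

Because a different random subset is silenced on every training step, no single feature can be counted on to be present, which pushes the network toward spreading information across many of them.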

Reproducibility and harmonization

A major challenge in MRI-based radiomics is feature stability. The values of both handcrafted and deep features can vary depending on the MRI scanner manufacturer, acquisition protocols, and reconstruction parameters. This lack of reproducibility can undermine a model’s reliability. Significant research is focused on developing harmonization techniques to standardize feature values across different sites, ensuring that an AI model produces consistent results regardless of where the MRI was performed.
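As a rough illustration of what harmonization tries to achieve, the sketch below standardizes a feature separately within each site, so that a scanner-specific offset and scale no longer dominate the values. This per-site z-scoring is a deliberately naive baseline chosen for brevity, not one of the dedicated harmonization methods used in the literature, and it would also erase any genuine difference between the patient populations of the two sites. The site labels and values are made up.

```python
from collections import defaultdict
from statistics import mean, pstdev


def harmonize_by_site(values, sites):
    """Z-score each feature value against the mean and spread of its own site."""
    by_site = defaultdict(list)
    for value, site in zip(values, sites):
        by_site[site].append(value)
    stats = {site: (mean(vs), pstdev(vs)) for site, vs in by_site.items()}
    harmonized = []
    for value, site in zip(values, sites):
        mu, sd = stats[site]
        harmonized.append((value - mu) / sd if sd else 0.0)
    return harmonized


# The same texture feature measured at two sites with very different scales.
values = [10.0, 12.0, 14.0, 110.0, 120.0, 130.0]
sites = ["A", "A", "A", "B", "B", "B"]

harmonized = harmonize_by_site(values, sites)
```

After the transform, the lowest, middle, and highest lesion at each site map to the same standardized values, so a model trained on site A sees site B on a familiar scale.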

Clinical Implications and Research Applications

Ultimately, the choice of feature type depends on the specific clinical goal, the available resources, and the stage of development.

When to use handcrafted features

Handcrafted features are ideal for exploratory research, especially with smaller datasets. Their interpretability makes them perfect for studies aiming to discover and validate new imaging biomarkers. For example, a study could use handcrafted features to test the hypothesis that lesion texture on ADC maps is correlated with Gleason grade. They are also well-suited for building simple, explainable AI models for specific clinical questions.

When to use deep features

Deep features are the preferred choice for developing high-performance, end-to-end AI classification systems intended for real-time clinical deployment. When trained on large, multi-center datasets, they can deliver the accuracy and robustness needed for a screening or diagnostic assistance tool. Their ability to automate the entire feature extraction process is essential for creating scalable, “zero-click” solutions that integrate seamlessly into clinical workflows.

Toward clinically deployable AI

For a product to be successfully deployed in a clinical setting, it must be accurate, reliable, and trustworthy. The most promising path forward likely involves a synthesis of both approaches. A deep learning model can provide a highly accurate initial classification, while an analysis based on handcrafted features or explainability tools can offer a “second opinion” that gives clinicians the confidence to act on the model’s recommendation.

Future Directions

The field of feature extraction for medical imaging is rapidly evolving, with several exciting trends on the horizon.

Deep radiomics and self-supervised learning

New techniques are emerging where deep networks are trained to predict or generate handcrafted-style radiomic features. This “deep radiomics” approach aims to combine the descriptive power of radiomics with the automated learning of deep models. Additionally, self-supervised learning allows models to learn powerful features from large amounts of unlabeled data, reducing the reliance on manual annotation.

Federated learning and data sharing

To overcome data scarcity, federated learning allows multiple institutions to collaboratively train a single deep learning model without ever sharing their sensitive patient data. Each institution trains the model on its local data, and only the model updates—not the data itself—are shared. This approach enables the development of more robust and generalizable models while preserving patient privacy.
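The aggregation step at the heart of this scheme can be sketched very compactly. The example below assumes the widely used federated averaging rule, in which a central server combines the locally trained parameters weighted by how many cases each site contributed. The two-parameter "models" and the site sizes are made up, and real systems add secure communication and many rounds of training on top of this.

```python
def federated_average(site_weights, site_sizes):
    """Sample-size-weighted average of each model parameter across sites."""
    if len(site_weights) != len(site_sizes):
        raise ValueError("need one size per site")
    total = sum(site_sizes)
    n_params = len(site_weights[0])
    return [
        sum(weights[i] * size for weights, size in zip(site_weights, site_sizes)) / total
        for i in range(n_params)
    ]


# Parameters after one round of local training at two hospitals.
site_weights = [
    [1.0, 0.0],  # hospital A, trained on 100 cases
    [3.0, 2.0],  # hospital B, trained on 300 cases
]
site_sizes = [100, 300]

global_weights = federated_average(site_weights, site_sizes)
print(global_weights)  # [2.5, 1.5]
```

Only the parameter lists and case counts cross institutional boundaries here, while the images stay where they were acquired.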

Explainable hybrid systems

The future likely belongs to hybrid systems that are both highly accurate and readily interpretable. These models might use a deep learning backbone for feature extraction but present their findings to the clinician in the form of familiar, radiomic-style descriptors and clear visual explanations. This will help bridge the gap between the “black box” of AI and the practical needs of clinical decision-making.

Conclusion

The debate between handcrafted and deep features is not about choosing one superior method but about understanding the right tool for the job. Handcrafted features bring interpretability, align with clinical knowledge, and provide a trusted foundation for quantitative analysis. Deep features offer unparalleled automation, performance at scale, and the ability to discover complex patterns beyond human definition.

For the next generation of AI-powered prostate MRI systems, the most effective path forward is one that combines the strengths of both. By integrating the clinical trust of handcrafted radiomics with the raw power and automation of deep learning, we can build more accurate, reliable, and ultimately more useful tools. This synthesis forms the foundation for AI that can truly transform prostate cancer detection, helping clinicians make faster, more confident decisions and improving outcomes for patients everywhere.