Domain Shift in Prostate MRI AI: Differences in Equipment, Protocols, and Vendors

Artificial intelligence in prostate MRI is only as strong as the data it learns from. An AI model can learn to detect cancerous lesions with remarkable accuracy, but what happens when the real world looks different from the training data? When the same prostate lesion appears different depending on the scanner, vendor, or imaging protocol, the model’s performance can drop dramatically. This phenomenon is known as domain shift, and it is one of the most significant challenges in deploying medical AI. This article explores how domain shift affects model accuracy and generalizability, and what strategies researchers are using to overcome it.

What Is Domain Shift in Medical Imaging AI?

At its core, domain shift is a mismatch between the data an AI model was trained on and the data it encounters in a new clinical setting. This mismatch can undermine the model’s reliability and lead to unexpected errors, making it a critical consideration for any AI tool intended for widespread clinical use.

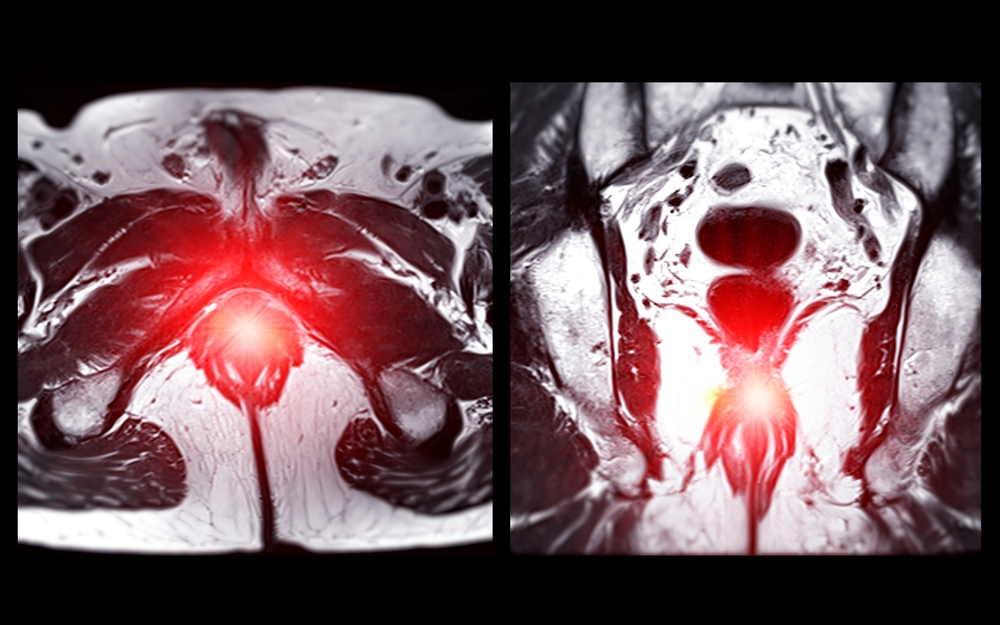

Defining domain shift in prostate MRI

In the context of prostate MRI, domain shift occurs when the statistical distribution of image data changes between different environments. For example, an AI model might be trained exclusively on images from a single hospital’s 3T Siemens scanner. The “training domain” is this specific dataset. When that same model is deployed at another hospital that uses a 1.5T GE scanner, it is now operating in a new “deployment domain.” The images from the GE scanner will have different characteristics—like noise levels, contrast, and resolution—creating a domain shift that the model was never prepared for.
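As a rough illustration of what "a change in statistical distribution" can mean, the sketch below compares the voxel intensities of two sites and reports how far the deployment mean sits from the training mean, in units of the training standard deviation. The intensity values and the function names are hypothetical, and a single summary number like this is only one of many possible ways to quantify a shift.

```python
from statistics import mean, pstdev

def summarize(intensities):
    """Return (mean, population standard deviation) of a flat list of intensities."""
    return mean(intensities), pstdev(intensities)

def standardized_mean_shift(train, deploy):
    """Difference in means, expressed in training standard deviations."""
    mu_train, sd_train = summarize(train)
    mu_deploy, _ = summarize(deploy)
    return (mu_deploy - mu_train) / sd_train

# Hypothetical voxel intensities (arbitrary units) from two sites.
train_site = [100, 110, 120, 130, 140]
deploy_site = [150, 165, 180, 195, 210]

shift = standardized_mean_shift(train_site, deploy_site)
print(f"Mean shift: {shift:.2f} training standard deviations")
```

A value near zero would suggest similar intensity ranges, while a large value signals that the deployment images occupy a range the model rarely saw during training.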

Why domain shift is a problem for AI models

AI models learn to recognize patterns based on the specific data they see during training. They become highly attuned to the nuances of that dataset. When a domain shift occurs, even small, seemingly insignificant differences can confuse the model. A subtle change in image contrast might cause the model to misinterpret healthy tissue as a suspicious lesion, or worse, miss a cancerous lesion entirely. This drop in performance makes the AI unreliable and potentially unsafe for clinical decision-making.

Clinical vs technical sources of domain shift

Domain shifts arise from both clinical and technical factors. Clinical sources include variations in patient populations, such as differences in age, disease prevalence, or genetics across geographic regions. However, the most common drivers are technical. These include differences in MRI scanner hardware, vendor-specific image processing software, and variations in the imaging protocols used to acquire the scans. Each of these technical factors can alter an image’s appearance, creating a new domain for the AI to navigate.

How MRI Equipment and Vendors Create Data Variability

The hardware and software that create MRI images are a primary source of domain shift. Different manufacturers build their systems in unique ways, leading to inherent variability in the images they produce.

Hardware differences between MRI scanners

Not all MRI scanners are created equal. Key hardware differences that impact image quality include:

- Magnetic Field Strength: Scans from a 1.5 Tesla (1.5T) magnet have a different signal-to-noise ratio (SNR) and tissue contrast compared to scans from a 3T magnet. 3T scanners generally offer higher SNR, which can be used to produce sharper images, but they can also introduce different types of artifacts.

- Coil Design: The radiofrequency coils used to receive the signal from the patient’s body vary in design and sensitivity, affecting image uniformity and clarity.

- Gradient Performance: The gradients are responsible for spatial encoding, and their strength and speed influence image resolution and acquisition time.

Vendor-specific reconstruction algorithms

After the raw MRI data is collected, it is processed by proprietary reconstruction software to create the final image. Each major vendor—like GE, Siemens, and Philips—uses unique algorithms that influence the image’s final appearance. These algorithms control factors like noise reduction, edge enhancement, and contrast, creating a distinct “look” for each vendor. An AI model trained on Siemens images may struggle to interpret the different feature patterns present in Philips images.

Calibration and maintenance impact

Even on the same machine, images can change over time. Hardware ages, and performance can drift between maintenance cycles. Regular calibration and tuning are essential to maintain consistency, but variations in these schedules across different imaging sites can introduce subtle, time-dependent variability. An image taken in January may look slightly different from one taken in July on the same scanner.

Variability Introduced by Imaging Protocols

Beyond the hardware, the specific settings used to acquire an MRI scan—the imaging protocol—introduce another significant layer of variability. While guidelines exist, they are not always strictly followed.

Protocol differences across sites

Radiology departments often develop their own customized imaging protocols. Even minor differences in key acquisition parameters can change how tissue appears:

- TR (Repetition Time) and TE (Echo Time): These settings control tissue contrast and weighting (e.g., T1 vs. T2).

- Slice Thickness: Thicker slices can obscure small lesions, while thinner slices provide more detail but may have lower SNR.

- Field of View (FOV): The size of the imaging area can affect pixel size and resolution.

An AI model trained on images with a 3 mm slice thickness may perform poorly on images acquired with a 4 mm thickness.
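To make the link between acquisition parameters and resolution concrete, the following sketch computes voxel dimensions from the field of view, the acquisition matrix, and the slice thickness. It assumes a square FOV and a square matrix, and the two "site" protocols are hypothetical values chosen for illustration, not recommendations from any guideline.

```python
def voxel_size_mm(fov_mm, matrix, slice_thickness_mm):
    """In-plane pixel size is the FOV divided by the matrix size (square case)."""
    pixel = fov_mm / matrix
    return (pixel, pixel, slice_thickness_mm)

def voxel_volume_mm3(size):
    """Volume of one voxel in cubic millimetres."""
    x, y, z = size
    return x * y * z

# Hypothetical protocols at two sites.
site_a = voxel_size_mm(fov_mm=180.0, matrix=320, slice_thickness_mm=3.0)
site_b = voxel_size_mm(fov_mm=200.0, matrix=256, slice_thickness_mm=4.0)

for name, size in (("site A", site_a), ("site B", site_b)):
    print(f"{name}: {size[0]:.3f} x {size[1]:.3f} x {size[2]:.1f} mm, "
          f"{voxel_volume_mm3(size):.2f} mm^3 per voxel")
```

Under these assumed settings, each voxel at site B covers more than twice the tissue volume of a voxel at site A, so a small lesion is averaged with more of its surroundings.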

Human-driven variability in acquisition

MRI technologists play a crucial role in image quality. Their expertise influences patient positioning, ensuring the prostate is centered correctly, and managing patient motion. Differences in technologist training and experience can lead to subtle variations in image quality and orientation from site to site, further contributing to domain shift.

The challenge of unstandardized acquisition settings

Organizations like the American College of Radiology (with PI-RADS) and the Quantitative Imaging Biomarkers Alliance (QIBA) have made great strides in promoting standardized prostate MRI protocols. However, global consensus is still lacking, and adherence to these guidelines varies. This lack of universal standardization remains a major challenge for developing AI models that can perform reliably everywhere.

The Impact of Domain Shift on Model Generalizability

Generalizability is an AI model’s ability to maintain its performance on new, unseen data from different domains. Domain shift is the primary threat to generalizability.

When AI performs well in research but fails in the clinic

This is a classic problem in medical AI. A model may achieve stellar accuracy (e.g., 95%) on the dataset from the institution where it was developed, yet when tested on data from another hospital its accuracy might plummet to 70%. This gap is commonly attributed to “dataset bias”: the model has fit the patterns of its home data, including scanner- and protocol-specific quirks, and fails to generalize to the diversity of the real world.

Effects on feature reproducibility and radiomic signatures

Radiomics involves extracting thousands of quantitative features from medical images to create predictive signatures. However, research has shown that many of these features are not stable across different scanner models or acquisition protocols. Domain shift can dramatically alter feature distributions, making radiomic signatures unreliable and difficult to reproduce across studies.
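One simple way to screen for unstable features is to measure the same subject or phantom on several scanners and check how much each feature varies. The sketch below uses the coefficient of variation as a stand-in stability measure. The feature names, the values, and the 10% threshold are all hypothetical, and published studies often use other statistics for this purpose.

```python
from statistics import mean, stdev

def coefficient_of_variation(values):
    """Sample standard deviation divided by the absolute mean."""
    return stdev(values) / abs(mean(values))

def stable_features(measurements, max_cv=0.10):
    """Return the feature names whose CV across scanners is at most max_cv."""
    return [name for name, values in measurements.items()
            if coefficient_of_variation(values) <= max_cv]

# Hypothetical values of two features, each measured on the same subject
# with three different scanners.
measurements = {
    "mean_intensity": [100.0, 102.0, 98.0],
    "glcm_contrast": [10.0, 20.0, 30.0],
}

print(stable_features(measurements))
```

Features that fail such a check are candidates for exclusion or harmonization before they are combined into a predictive signature.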

Clinical implications of poor generalization

Poor generalization has serious consequences. It erodes clinical trust in AI tools, as radiologists and urologists cannot rely on a model that performs unpredictably. It also creates major hurdles for regulatory bodies like the FDA, which require manufacturers to prove their AI is safe and effective across the diverse range of equipment used in clinical practice. Ultimately, it slows the adoption of potentially life-saving technology.

Strategies to Overcome Domain Shift

Fortunately, researchers have developed several powerful strategies to combat domain shift and build more robust, generalizable AI models.

Data harmonization and intensity normalization

One approach is to preprocess the data so that it looks more alike, regardless of its source. Methods like Z-score normalization and histogram matching align image intensity distributions, while the ComBat technique (originally from genomics) is typically applied to extracted features to remove scanner-specific effects. In each case, the correction happens before the data ever reaches the AI model.
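Two of the methods named above can be sketched in a few lines. The example below is a minimal, dependency-free illustration that works on flat lists of intensities. Real pipelines would operate on image arrays and usually restrict the statistics to a tissue mask, and the function names here are hypothetical rather than taken from any library.

```python
from bisect import bisect_right
from statistics import mean, pstdev

def z_score(intensities):
    """Rescale intensities to zero mean and unit standard deviation."""
    mu, sd = mean(intensities), pstdev(intensities)
    if sd == 0:
        # A constant image carries no contrast to rescale.
        return [0.0 for _ in intensities]
    return [(v - mu) / sd for v in intensities]

def histogram_match(source, reference):
    """Map each source value to the reference value at the same quantile."""
    src_sorted = sorted(source)
    ref_sorted = sorted(reference)
    n_src, n_ref = len(src_sorted), len(ref_sorted)
    matched = []
    for v in source:
        # Empirical CDF of the source at v: fraction of values <= v.
        q = bisect_right(src_sorted, v) / n_src
        # Position of the same quantile in the sorted reference values.
        idx = min(n_ref - 1, max(0, round(q * n_ref) - 1))
        matched.append(ref_sorted[idx])
    return matched

# Hypothetical intensities from a new scanner and from the training scanner.
new_scanner = [10, 20, 30, 40]
training_scanner = [100, 200, 300, 400]

normalized = z_score(new_scanner)
matched = histogram_match(new_scanner, training_scanner)
```

Z-score normalization removes global offset and scale differences, while histogram matching also reshapes the distribution. The latter is more aggressive and can distort contrast if the two images contain different tissue proportions.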

Transfer learning and fine-tuning approaches

Instead of training a model from scratch, transfer learning starts with a model that has already been trained on a large, general dataset. This pre-trained model is then “fine-tuned” using a smaller amount of site-specific data. This allows the model to adapt its knowledge to the unique characteristics of a new clinical domain without requiring a massive local dataset.
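The idea of fine-tuning can be shown with a deliberately tiny model. The sketch below stands in a one-feature logistic regression for a deep network: it starts from "pre-trained" parameters and continues gradient descent on a handful of samples from a new site. The parameters, data, learning rate, and epoch count are all hypothetical, and real fine-tuning of imaging models typically involves freezing or slowing the updates of early layers.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(weights, bias, samples):
    """Mean binary cross-entropy over (features, label) pairs."""
    total = 0.0
    for x, y in samples:
        p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
        p = min(max(p, 1e-12), 1 - 1e-12)  # avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(samples)

def fine_tune(weights, bias, samples, lr=0.1, epochs=50):
    """Continue gradient descent from pre-trained parameters on new-site data."""
    w, b = list(weights), bias
    n = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in samples:
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

# Hypothetical pre-trained parameters and a small new-site dataset whose
# feature values are offset relative to the original training site.
pretrained_w, pretrained_b = [1.0], -1.0
new_site = [([2.0], 0), ([2.5], 0), ([3.5], 1), ([4.0], 1)]

tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, new_site)
print("loss before:", log_loss(pretrained_w, pretrained_b, new_site))
print("loss after: ", log_loss(tuned_w, tuned_b, new_site))
```

Because training starts from existing parameters rather than random ones, only a few labeled local cases are needed to shift the decision boundary toward the new site's intensity range.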

Domain adaptation techniques

More advanced methods, known as domain adaptation, aim to train models that are inherently robust to domain shifts. In adversarial training, for example, a second model tries to identify which scanner or site an image came from based on the main model’s internal features, and the main model is trained to defeat it, which pushes it toward features that are scanner-invariant. Other methods, like style transfer, can even translate an image from one domain’s “style” to another.

Multi-Site Collaboration and Dataset Standardization

The most effective way to build a generalizable model is to train it on diverse data from the very beginning. This requires collaboration and standardization across institutions.

The role of multi-center data in building robust AI

By pooling data from multiple hospitals with different scanners, vendors, and protocols, researchers can create large, diverse datasets. Training an AI model on this varied data exposes it to a wide range of domains from the outset, forcing it to learn the fundamental patterns of the disease rather than the quirks of a specific scanner.

Standardized imaging protocols and open data initiatives

Initiatives like PI-RADS and QIBA are crucial for promoting standardized acquisition protocols that reduce variability at the source. Furthermore, open data archives like The Cancer Imaging Archive (TCIA) provide researchers with access to multi-center datasets, fueling the development of more robust AI.

Federated learning for privacy-preserving cross-site training

Sharing patient data between institutions raises significant privacy concerns. Federated learning offers a solution. In this approach, the AI model is sent to each hospital to be trained on the local data. The updated model parameters—not the raw patient data—are then sent back to a central server and aggregated. This allows for collaborative, multi-site training that improves generalizability while protecting patient privacy.
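The aggregation step can be sketched as a weighted average of the parameters returned by each hospital, in the style of the federated averaging scheme. This is an assumption about how the aggregation is done, since the description above does not fix a particular rule. The parameter vectors and sample counts are hypothetical.

```python
def federated_average(site_updates):
    """Average parameter vectors, weighting each site by its sample count.

    site_updates: list of (parameters, n_samples) tuples, where parameters
    is a list of floats of the same length for every site.
    """
    total = sum(n for _, n in site_updates)
    n_params = len(site_updates[0][0])
    return [
        sum(params[i] * n for params, n in site_updates) / total
        for i in range(n_params)
    ]

# Hypothetical updates from two hospitals; only parameters and counts are
# shared with the central server, never the images themselves.
updates = [
    ([0.2, 1.0], 100),  # hospital A trained on 100 local cases
    ([0.4, 2.0], 300),  # hospital B trained on 300 local cases
]

global_params = federated_average(updates)
print(global_params)
```

Weighting by sample count lets larger sites contribute proportionally more, although other weightings are possible when sites differ greatly in size or data quality.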

Regulatory and Validation Considerations

Overcoming domain shift is not just a technical goal; it is a regulatory necessity for bringing medical AI to market.

How domain shift affects FDA and CE approval

Regulatory bodies like the FDA in the United States and the notified bodies responsible for CE marking in Europe require AI manufacturers to demonstrate that their products are safe and effective for the intended patient population and across the range of equipment used in practice. This means providing evidence of robust performance across different scanner vendors, field strengths, and sites.

Need for external validation studies

A claim of generalizability must be backed by rigorous external validation. This involves testing the AI model on independent datasets from multiple institutions that were not used during the model’s development. These studies are essential for strengthening claims of safety and efficacy.
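In practice, external validation results are most informative when they are broken down by site rather than pooled. The sketch below computes sensitivity and specificity per site from case-level records. The record layout, site names, and labels are hypothetical.

```python
from collections import defaultdict

def per_site_metrics(records):
    """records: iterable of (site, true_label, predicted_label), labels 0 or 1."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for site, truth, pred in records:
        if truth == 1:
            key = "tp" if pred == 1 else "fn"
        else:
            key = "tn" if pred == 0 else "fp"
        counts[site][key] += 1

    metrics = {}
    for site, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        metrics[site] = {
            # None marks a metric that is undefined for this site.
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
        }
    return metrics

# Hypothetical case-level results from the development site and one external site.
records = [
    ("internal", 1, 1), ("internal", 1, 1), ("internal", 0, 0), ("internal", 0, 0),
    ("external", 1, 1), ("external", 1, 0), ("external", 0, 0), ("external", 0, 1),
]

results = per_site_metrics(records)
for site, m in results.items():
    print(site, m)
```

Reporting each site separately makes a performance gap visible that a single pooled figure could hide.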

Continuous performance monitoring in clinical deployment

The work does not end after a product is approved. Scanners evolve, and new protocols are introduced. Post-market surveillance is critical for monitoring the AI’s performance in the real world and recalibrating the model as needed to ensure it remains accurate and reliable over time.
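A minimal form of such monitoring is a rolling check of how often the model agrees with confirmed findings. The sketch below flags the model for review when accuracy over a recent window falls below a baseline by more than a set tolerance. The class name, window size, baseline, and tolerance are hypothetical choices, not values taken from any regulatory guidance.

```python
from collections import deque

class PerformanceMonitor:
    """Track recent agreement between model output and confirmed findings."""

    def __init__(self, baseline_accuracy, window=50, tolerance=0.10):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # oldest results drop off automatically

    def record(self, model_correct):
        self.outcomes.append(1 if model_correct else 0)

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        """True once the window is full and accuracy is below baseline minus tolerance."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance

# Hypothetical usage: ten correct cases, then five misses after a protocol change.
monitor = PerformanceMonitor(baseline_accuracy=0.9, window=10, tolerance=0.1)
for _ in range(10):
    monitor.record(True)
flag_before = monitor.needs_review()
for _ in range(5):
    monitor.record(False)
flag_after = monitor.needs_review()
print(flag_before, flag_after)
```

A flag raised this way is a prompt for human investigation, for example to check whether a scanner upgrade or a new protocol coincides with the drop.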

Future Directions in Domain Generalization

The field is rapidly advancing toward AI that is truly “plug-and-play,” working reliably out of the box in any clinical environment.

Domain generalization vs domain adaptation

While domain adaptation focuses on adapting a model to a new but known domain, domain generalization aims to build a model that performs well on completely unseen domains without any fine-tuning. This is the ultimate goal for creating universally applicable AI.

Self-supervised and synthetic data approaches

Training on massive, unlabeled datasets using self-supervised learning can help models learn more fundamental and robust representations of anatomy. Additionally, generative adversarial networks (GANs) can be used to create synthetic MRI data that simulates a wide variety of scanner types and artifacts, expanding domain diversity for training.

Toward vendor-neutral and universally calibrated AI

The collective vision is to create vendor-neutral AI that performs with high reliability regardless of the scanner or protocol used. This will require a combination of all the strategies discussed: standardized protocols, diverse training data, advanced AI techniques, and rigorous, continuous validation.

Conclusion

Domain shift, driven by differences in equipment, protocols, and vendors, remains one of the greatest challenges in achieving reliable, generalizable AI for prostate MRI. A model that works well in one hospital can fail in another, undermining clinical trust and slowing adoption.

However, the field is actively tackling this problem head-on. By combining smart data harmonization techniques, advanced AI methods like transfer learning, and large-scale, multi-site collaboration, we are moving steadily toward a future of vendor-neutral, clinically trustworthy prostate MRI AI. The goal is clear: to build AI that radiologists can depend on, no matter where they practice or what equipment they use.

