
Reproducibility, Repeatability, and Harmonization of Radiomic Features Across Scanners

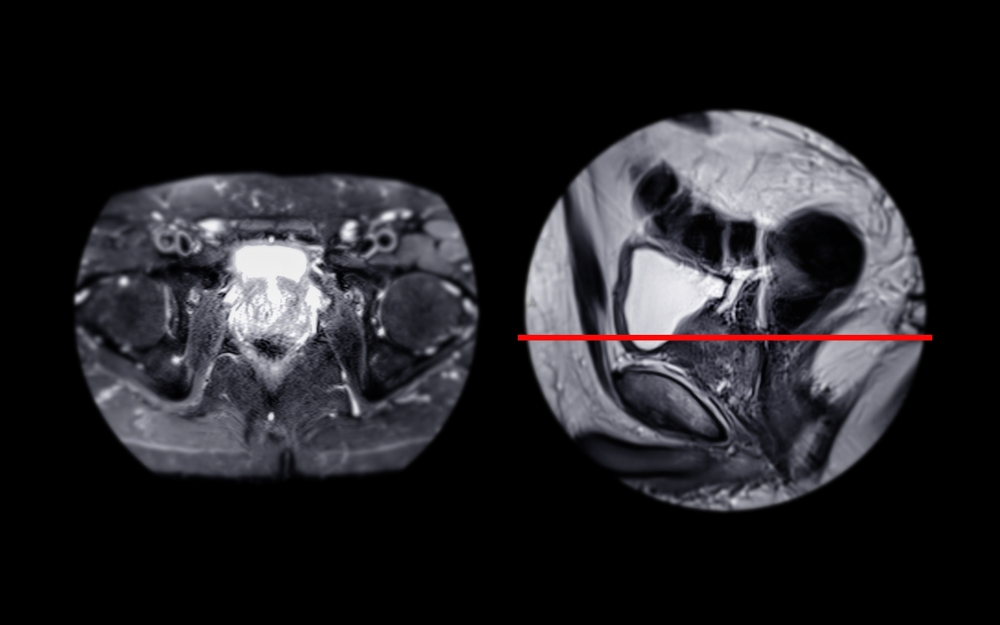

For radiomics to become a truly useful tool in clinical practice, its features must be consistent and comparable across different MRI scanners, medical sites, and imaging protocols. Think of it like a blood test: a result for a specific biomarker should mean the same thing whether it was processed in a lab in New York or a lab in California. The same principle applies to the quantitative features extracted from medical images. This post explains reproducibility, repeatability, and harmonization, the concepts that form the foundation for building reliable artificial intelligence (AI) models for prostate cancer lesion classification.

Why Reproducibility and Repeatability Matter in Radiomics

Radiomics involves extracting a large number of quantitative features from medical images, providing data that goes far beyond what the human eye can see. These features can describe a tumor’s shape, intensity, and texture. When used to train AI models, they can help predict disease characteristics, such as how aggressive a prostate cancer lesion might be. However, if the feature values change simply because the patient was scanned on a different machine, any model built on that data becomes unreliable.

Defining reproducibility vs. repeatability

While often used interchangeably, reproducibility and repeatability have distinct meanings in the context of radiomics. Understanding the difference is crucial for evaluating the quality of imaging biomarkers.

Reproducibility refers to the stability of a radiomic feature when an image is acquired under different conditions. This could mean using different MRI scanners, different vendors (e.g., Siemens, GE, Philips), or even different hospitals. High reproducibility means a feature’s value remains consistent regardless of these external variables.

Repeatability, on the other hand, describes the stability of a feature when imaging is performed multiple times on the same scanner using the exact same protocol. This measures the inherent consistency of the feature itself, isolating it from external factors. A feature with high repeatability gives you confidence that any measured change over time reflects a true biological shift, not just random noise from the imaging process.

Why consistency is critical for AI and clinical translation

Inconsistent radiomic features create unreliable AI models. A model trained on data from one hospital’s scanner might perform poorly when applied to images from another. This lack of “generalizability” is a major barrier to bringing AI tools from the research lab into routine clinical use.

For a diagnostic tool to be effective, a radiologist or urologist must trust its output. If an AI model flags a lesion as high-risk, that classification needs to be based on stable, validated data. Without reproducibility and repeatability, the model’s predictions could be misleading, potentially leading to incorrect treatment decisions. Consistent features ensure that the AI is responding to the patient’s biology, not the quirks of the hardware.

The challenge of variability in prostate MRI

Prostate MRI is particularly susceptible to variability. Unlike CT, where intensities are expressed on a calibrated scale (Hounsfield units), MRI signal intensities are relative and have no fixed physical unit, so the same tissue can produce different values on different systems. Factors like hardware differences between scanner models, the type of receive coil used (for example, with or without an endorectal coil), and the field strength of the magnet (e.g., 1.5 Tesla vs. 3.0 Tesla) all influence the final image.

Furthermore, the software-based reconstruction algorithms that turn raw signal data into a viewable image are often proprietary and vary by vendor. Each of these elements can subtly alter pixel values, which in turn affects the radiomic features calculated from them. Overcoming this inherent variability is a central challenge in making radiomics a dependable clinical standard.

Factors Affecting Radiomic Feature Variability

The path from a patient on an MRI table to a set of quantitative radiomic features is complex. At each step, sources of variability can creep in, threatening the integrity of the data. Understanding these factors is the first step toward controlling them.

Scanner hardware and vendor differences

No two MRI scanners are exactly alike. Different models have unique gradient systems, which are responsible for spatial encoding of the MRI signal. They also use different radiofrequency coils and have distinct hardware architectures.

Even more significant are the differences between vendors. Each manufacturer uses proprietary image reconstruction algorithms and filters (the software that transforms raw signal data into a diagnostic image). These algorithms can enhance certain image characteristics, like sharpness or contrast, but in doing so they can alter the underlying texture and intensity metrics that radiomic models rely on.

MRI acquisition parameters and protocol settings

The “recipe” used to acquire an MRI scan, known as the protocol, has a profound impact on radiomic features. Key parameters include:

- TR (Repetition Time) and TE (Echo Time): These timing parameters control image contrast and are fundamental to how different tissues appear.

- Slice Thickness: Thicker slices average more tissue together, which can smooth out fine textures and reduce the accuracy of shape-based features.

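- Field of View (FOV): This determines the size of the area being imaged. Together with the acquisition matrix, it sets the in-plane pixel size (pixel spacing = FOV ÷ matrix size), so changing either one changes the image resolution.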

- Sequence Type: Different MRI sequences (such as T2-weighted or diffusion-weighted imaging) highlight different aspects of tissue biology, and each generates its own set of radiomic features.

Even small adjustments to these settings can cause significant shifts in feature values, making it difficult to compare scans from different institutions that don’t use identical protocols.
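To make the FOV and slice-thickness effects concrete, the arithmetic below compares voxel geometry for two hypothetical prostate T2-weighted protocols. The protocol values are illustrative assumptions, not recommendations, and the sketch ignores scanner-side interpolation (systems often reconstruct to a finer matrix than they acquire).

```python
from dataclasses import dataclass


@dataclass
class Protocol:
    """Hypothetical acquisition geometry for one site's T2-weighted series."""
    name: str
    fov_mm: float              # in-plane field of view (square FOV assumed)
    matrix: int                # acquisition matrix (square matrix assumed)
    slice_thickness_mm: float  # no inter-slice gap assumed

    def pixel_spacing_mm(self) -> float:
        # In-plane pixel size = field of view / matrix size
        return self.fov_mm / self.matrix

    def voxel_volume_mm3(self) -> float:
        # Volume of tissue averaged into a single voxel
        return self.pixel_spacing_mm() ** 2 * self.slice_thickness_mm


site_a = Protocol("Site A", fov_mm=200.0, matrix=320, slice_thickness_mm=3.0)
site_b = Protocol("Site B", fov_mm=240.0, matrix=256, slice_thickness_mm=4.0)

for p in (site_a, site_b):
    print(f"{p.name}: {p.pixel_spacing_mm():.4f} mm in-plane, "
          f"{p.voxel_volume_mm3():.4f} mm^3 per voxel")
# Site A: 0.6250 mm in-plane, 1.1719 mm^3 per voxel
# Site B: 0.9375 mm in-plane, 3.5156 mm^3 per voxel
```

With these assumed settings, each Site B voxel averages three times as much tissue as a Site A voxel, so texture features computed on the raw grids are not directly comparable. Resampling both series to a common voxel spacing before extraction is a common way to reduce this mismatch.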

Preprocessing and segmentation variability

After an image is acquired, it undergoes several preprocessing steps before features are extracted. Bias field correction (for example, with the widely used N4 algorithm) removes slowly varying intensity inhomogeneity across the image, often caused by receive-coil sensitivity, while normalization scales pixel values into a standard range. The methods chosen for these steps can influence the final feature measurements.

Perhaps the largest source of variability is segmentation—the process of outlining the precise boundary of a lesion. Whether done manually by a radiologist or automatically with an AI tool, small differences in the defined border can lead to large changes in calculated features, especially for shape and texture. This highlights the need for both standardized preprocessing pipelines and highly consistent segmentation tools.
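A quick way to see how much a border disagreement matters is to compare two outlines of the same lesion using the Dice similarity coefficient, 2|A∩B| / (|A| + |B|), alongside a simple shape feature (area). The 2D toy masks below are hypothetical: the second reader's outline is about one pixel tighter all around.

```python
def dice(mask_a, mask_b):
    """Dice similarity coefficient between two sets of (row, col) pixel coordinates."""
    if not mask_a and not mask_b:
        return 1.0  # two empty masks agree trivially
    return 2 * len(mask_a & mask_b) / (len(mask_a) + len(mask_b))


def disk(radius, center=(10, 10)):
    """Pixels of a 2D grid whose centers lie within `radius` of `center` (a toy lesion)."""
    cy, cx = center
    r = int(radius) + 1
    return {
        (y, x)
        for y in range(cy - r, cy + r + 1)
        for x in range(cx - r, cx + r + 1)
        if (y - cy) ** 2 + (x - cx) ** 2 <= radius ** 2
    }


reader_1 = disk(5.0)  # hypothetical outline from reader 1
reader_2 = disk(4.0)  # reader 2 draws the border about one pixel tighter

print(f"Dice: {dice(reader_1, reader_2):.2f}")                   # Dice: 0.75
print(f"Area change: {len(reader_2) / len(reader_1) - 1:+.0%}")  # Area change: -40%
```

On this small lesion, a one-pixel disagreement still gives a Dice of 0.75, yet the area drops by about 40%. The effect grows as lesions get smaller, because the border makes up a larger share of the lesion.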

Evaluating Radiomic Feature Reproducibility

Before a radiomic feature can be trusted for clinical use, it must be rigorously tested. Researchers use several methods to measure how stable and reliable these features are across different conditions.

Test–retest studies and phantom experiments

One common approach is a test–retest study. In this design, a patient is scanned multiple times on the same scanner (to test repeatability) or on different scanners (to test reproducibility). The features are calculated for each scan, and the results are compared to see how much they vary.

Another valuable tool is a phantom. Phantoms are specially designed objects with known physical properties that can be imaged to simulate human tissue. Because a phantom’s structure doesn’t change, any variation in radiomic features can be attributed directly to the imaging process. This allows for controlled experiments to isolate the effects of different scanners, protocols, and vendors.

Metrics for reproducibility assessment

To quantify stability, researchers use specific statistical metrics. Three of the most common are:

- Intraclass Correlation Coefficient (ICC): This is the most widely used metric for reproducibility. It measures the proportion of total variance that is due to true differences between subjects rather than measurement error. An ICC value close to 1.0 indicates excellent stability. Several ICC forms exist (for example, ICC(2,1) and ICC(3,1)), so studies should report which one they used.

- Coefficient of Variation (CV): The CV measures the relative variability of a feature. It is calculated as the standard deviation divided by the mean. A lower CV indicates higher stability.

- Concordance Correlation Coefficient (CCC): The CCC evaluates the agreement between two sets of measurements, assessing how far the data deviates from a perfect 45-degree line of concordance. It is particularly useful for comparing features across different methods.
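All three metrics can be computed directly from a test–retest or multi-scanner table. The sketch below uses ICC(2,1) (two-way random effects, absolute agreement, single measurement, in Shrout and Fleiss notation), which is one common choice but not the only valid one. The feature values are hypothetical, and the CV assumes a positive-valued feature whose mean is not near zero.

```python
from statistics import fmean, stdev


def icc_2_1(data):
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    data[i][j] is the feature value for subject i on scan (or scanner) j.
    """
    n, k = len(data), len(data[0])
    grand = fmean(v for row in data for v in row)
    row_means = [fmean(row) for row in data]
    col_means = [fmean(row[j] for row in data) for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # systematic scan/scanner offsets
    ss_total = sum((v - grand) ** 2 for row in data for v in row)
    ss_err = ss_total - ss_rows - ss_cols                   # residual error

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n)


def mean_within_subject_cv(data):
    """Average over subjects of (sample SD / mean) across that subject's repeated scans."""
    return fmean(stdev(row) / fmean(row) for row in data)


def lin_ccc(x, y):
    """Lin's concordance correlation coefficient between two paired measurement sets."""
    mx, my = fmean(x), fmean(y)
    vx = fmean((a - mx) ** 2 for a in x)
    vy = fmean((b - my) ** 2 for b in y)
    sxy = fmean((a - mx) * (b - my) for a, b in zip(x, y))
    # Penalizes both scatter (low correlation) and systematic offset (mx != my)
    return 2 * sxy / (vx + vy + (mx - my) ** 2)


# Hypothetical values of one feature for five lesions, each scanned on two scanners
scans = [[10.1, 10.6], [12.0, 12.4], [8.9, 9.5], [15.2, 15.9], [11.3, 11.8]]
scanner_1 = [row[0] for row in scans]
scanner_2 = [row[1] for row in scans]

print(f"ICC(2,1): {icc_2_1(scans):.3f}")
print(f"Mean within-subject CV: {mean_within_subject_cv(scans):.1%}")
print(f"CCC: {lin_ccc(scanner_1, scanner_2):.3f}")
```

Every lesion reads slightly higher on the second scanner in this made-up table. Both ICC(2,1) and the CCC penalize that systematic offset, whereas a consistency-type ICC such as ICC(3,1) would ignore it, which is why the chosen form matters.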

Feature robustness across imaging conditions

Not all radiomic features are created equal. Studies have consistently shown that some categories of features are naturally more robust than others.

- Shape and first-order features tend to be the most stable across scanners, provided the segmentation itself is consistent. Shape features describe the geometry of the lesion (e.g., volume, sphericity), while first-order features are based on the distribution of pixel intensities (e.g., mean, median, skewness).

- Texture-based features are often more sensitive to changes in acquisition parameters and scanner hardware. These features quantify the spatial patterns of pixels and can be heavily influenced by image resolution, noise levels, and reconstruction algorithms.

Understanding which features are inherently more stable helps researchers select the most reliable candidates for building clinical AI models.
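A toy illustration of why "stable" depends on the kind of change: if a second scanner simply applied a linear gain and offset to the same tissue, distribution-shape features such as skewness would be unchanged, while absolute-intensity features such as the mean and median would shift. Real scanner differences are rarely this simple, and the ROI values, gain, and offset below are hypothetical. Skewness is computed with population moments, following our reading of the IBSI definition.

```python
from statistics import fmean, median, pstdev


def first_order(values):
    """Three first-order features of the voxel intensities inside an ROI."""
    mu = fmean(values)
    sigma = pstdev(values)  # population SD
    skewness = fmean(((v - mu) / sigma) ** 3 for v in values)
    return {"mean": mu, "median": median(values), "skewness": skewness}


roi_a = [210, 225, 230, 236, 240, 244, 251, 262, 290, 335]  # hypothetical ROI, scanner A
roi_b = [1.8 * v + 40 for v in roi_a]  # same tissue under an assumed linear gain/offset

for label, roi in (("Scanner A", roi_a), ("Scanner B", roi_b)):
    f = first_order(roi)
    print(f"{label}: mean={f['mean']:.1f}  median={f['median']:.1f}  "
          f"skewness={f['skewness']:.3f}")
```

This is one reason MRI pipelines normalize intensities before extracting features that depend on absolute values.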

Harmonization Techniques Across Scanners

Since it’s not always possible to use identical scanners and protocols everywhere, researchers have developed “harmonization” techniques. These are post-processing methods designed to remove technical variability from images or features, making them more comparable across different sites.

Intensity normalization and rescaling methods

The most basic form of harmonization involves standardizing the pixel intensity values. Different scanners may produce images with vastly different brightness and contrast ranges. Normalization techniques adjust these values to a common scale.

- Histogram Matching: This method modifies the intensity histogram of one image to match the histogram of a reference image.

- Z-score Normalization: This technique rescales intensities by subtracting the mean and dividing by the standard deviation, giving the image a mean of zero and a standard deviation of one.

- Nyúl Standardization: This is a more advanced histogram-based method that learns a standard set of intensity landmarks (percentiles) from a training set of images and maps each new image onto that scale with a piecewise-linear transformation.
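The first two methods are short enough to sketch in a few lines. The histogram matching below is a simple quantile (nearest-rank) mapping, one of several ways to implement the idea. Nyúl standardization extends it by averaging percentile landmarks over a training set and interpolating between them. The intensity values are hypothetical, and in practice the statistics are often computed inside a body or organ mask rather than over the whole image.

```python
from bisect import bisect_right
from statistics import fmean, pstdev


def zscore(values):
    """Z-score normalization: subtract the mean, divide by the population SD."""
    mu, sd = fmean(values), pstdev(values)
    return [(v - mu) / sd for v in values]


def histogram_match(source, reference):
    """Map each source value to the reference value at the same empirical quantile."""
    src_sorted = sorted(source)
    ref_sorted = sorted(reference)
    n_src, n_ref = len(src_sorted), len(ref_sorted)
    matched = []
    for v in source:
        rank = bisect_right(src_sorted, v)  # number of source values <= v (1..n_src)
        # Nearest-rank index into the reference: ceil(rank * n_ref / n_src) - 1,
        # done in integer arithmetic to avoid floating-point rounding
        idx = (rank * n_ref + n_src - 1) // n_src - 1
        matched.append(ref_sorted[idx])
    return matched


scanner_a = [100, 120, 130, 150, 400]     # hypothetical ROI intensities
scanner_b = [800, 900, 1000, 1100, 2000]  # same tissue class on another scanner's scale

print([round(z, 2) for z in zscore(scanner_a)])
print(histogram_match(scanner_a, scanner_b))  # [800, 900, 1000, 1100, 2000]
```

Z-scoring only aligns the first two moments, while histogram matching forces the whole distribution onto the reference, which is more aggressive and can also erase genuine differences if the reference tissue mix differs.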

Statistical harmonization: ComBat and beyond

For removing more complex differences related to specific scanners or sites, statistical methods are used. The most popular of these is ComBat. Originally developed for genomics, ComBat adjusts data to remove known “batch effects”; in this case, the “batch” is the scanner or institution. For each feature, it estimates site-specific shifts in mean and variance, stabilizes those estimates with an empirical Bayes step, and removes them. When the relevant biological covariates are included in the model, this removes variability tied to the acquisition site while preserving the underlying biological differences between patients.
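The core idea can be sketched as a per-feature location/scale adjustment. This is deliberately not full ComBat: it has no biological covariates and no empirical Bayes shrinkage, so it assumes the sites do not differ in patient mix (otherwise it would also remove real biology). Established open-source ComBat implementations are the better choice for actual studies. The feature values and site labels below are hypothetical.

```python
from collections import defaultdict
from math import sqrt
from statistics import fmean, pstdev


def location_scale_harmonize(values, sites):
    """Simplified ComBat-style adjustment for ONE feature (no covariates, no empirical Bayes).

    Each site's values are standardized with that site's own mean and SD, then
    mapped onto the grand mean and the pooled within-site SD. Every site needs
    at least two distinct values so its SD is non-zero.
    """
    by_site = defaultdict(list)
    for v, s in zip(values, sites):
        by_site[s].append(v)
    site_stats = {s: (fmean(vs), pstdev(vs)) for s, vs in by_site.items()}

    grand_mean = fmean(values)
    # Pooled within-site variance, weighted by each site's sample count
    pooled_sd = sqrt(
        sum(len(vs) * site_stats[s][1] ** 2 for s, vs in by_site.items()) / len(values)
    )
    return [
        grand_mean + pooled_sd * (v - site_stats[s][0]) / site_stats[s][1]
        for v, s in zip(values, sites)
    ]


# Hypothetical feature values for six lesions imaged at two sites
feature = [1.10, 1.25, 0.95, 1.60, 1.80, 1.40]
site = ["A", "A", "A", "B", "B", "B"]
harmonized = location_scale_harmonize(feature, site)
print([round(h, 3) for h in harmonized])
```

After the adjustment both sites share the same mean and spread, and each lesion keeps its rank within its own site.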

Deep learning–based harmonization

More recently, AI itself has been used to solve the harmonization problem. Deep learning models can be trained to learn the complex, non-linear transformations needed to make an image from one scanner look as if it were acquired on another. These AI-powered approaches can automatically align either the images themselves or the radiomic feature distributions across different centers, offering a powerful and flexible way to achieve harmonization.

Multi-Center and Cross-Vendor Challenges

Achieving radiomic feature reproducibility in the real world requires collaboration and standardization on a large scale. Individual efforts are not enough; the entire medical imaging community must work together.

The importance of standardized imaging protocols

To minimize variability at the source, leading organizations have developed guidelines for standardized MRI acquisition. The PI-RADS (Prostate Imaging Reporting and Data System) provides detailed recommendations for prostate MRI protocols. Similarly, the Quantitative Imaging Biomarkers Alliance (QIBA) works to create and promote consensus-based standards to achieve reproducible quantitative results from medical imaging. Adherence to these protocols is a critical first step toward harmonization.

Data sharing and benchmarking efforts

Large, diverse datasets are essential for developing and validating robust AI models. Initiatives like The Cancer Imaging Archive (TCIA) provide a public repository of medical images from multiple cancer types, including prostate cancer. These multi-institutional datasets allow researchers to test the generalizability of their models and benchmark the performance of different harmonization techniques across a wide range of scanners and sites.

Regulatory implications for reproducibility

Reproducibility isn’t just a scientific goal; it’s also a regulatory concern. For an AI tool to receive FDA clearance, its manufacturer must show that the device is safe and effective, and regulators typically expect evidence that its performance holds up across different clinical settings and on images from different scanners. A failure to show robust reproducibility can be a major roadblock to getting an AI product to market.

Best Practices for Achieving Reliable Radiomic Features

Building a reproducible radiomics pipeline requires a commitment to standardization at every stage, from image acquisition to feature extraction and ongoing quality control.

Acquisition standardization

The most effective way to ensure consistency is to standardize the imaging process itself. Whenever possible, imaging centers should adopt harmonized protocols, such as those recommended by PI-RADS. This includes using consistent field strengths, sequences, and key acquisition parameters across all scanners involved in a study or clinical workflow.

Preprocessing standardization

To ensure that feature extraction is consistent, all images should be processed using the same pipeline. The Image Biomarker Standardization Initiative (IBSI) provides comprehensive guidelines and reference values for radiomic features. Aligning preprocessing steps and feature calculation algorithms with IBSI standards helps ensure that results are comparable and reproducible across different software platforms.
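One concrete place where aligning with IBSI matters is intensity discretization, which precedes texture-feature calculation. IBSI describes two schemes, fixed bin number and fixed bin size. The sketch below follows our reading of those definitions, with hypothetical ROI values, bin count, bin width, and lower bound.

```python
import math


def discretize_fixed_bin_number(values, n_bins):
    """Fixed bin number: split the ROI's own [min, max] range into n_bins equal bins."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1] * len(values)  # constant ROI: everything falls in one bin
    return [
        n_bins if v >= hi else math.floor(n_bins * (v - lo) / (hi - lo)) + 1
        for v in values
    ]


def discretize_fixed_bin_size(values, bin_width, lower_bound):
    """Fixed bin size: bins of constant intensity width starting at lower_bound."""
    return [math.floor((v - lower_bound) / bin_width) + 1 for v in values]


roi = [0.0, 12.0, 25.0, 49.0, 50.0]  # hypothetical ROI intensities
print(discretize_fixed_bin_number(roi, n_bins=4))                       # [1, 1, 3, 4, 4]
print(discretize_fixed_bin_size(roi, bin_width=25.0, lower_bound=0.0))  # [1, 1, 2, 2, 3]
```

Fixed bin number adapts to each ROI's own range, so the same intensity can land in different bins in two lesions. Fixed bin size keeps a constant intensity width per bin, which is most meaningful when intensities are on a comparable (normalized or calibrated) scale. Either way, the scheme and its parameters should be reported, because they change texture feature values.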

Continuous validation and monitoring

Reproducibility is not a one-time check. As imaging centers acquire new scanners or update software, it’s essential to perform ongoing validation. This involves regularly assessing feature stability to ensure that hardware or software changes have not introduced new sources of variability. Continuous monitoring helps maintain the long-term reliability of any clinical AI tool that depends on radiomic features.
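A minimal monitoring check might compare features measured on the same phantom before and after a scanner or software update, then flag any that moved beyond a tolerance. The feature names, values, and the 10% tolerance below are hypothetical; a real QA program would derive per-feature limits from repeated baseline scans.

```python
import math


def flag_drift(baseline, current, max_rel_change=0.10):
    """Return {feature: relative change} for phantom features that drifted too far.

    baseline and current map feature names to values measured on the same
    phantom before and after an update. max_rel_change is a hypothetical
    tolerance. A feature missing from `current` is reported with value None.
    """
    flagged = {}
    for name, ref in baseline.items():
        if name not in current:
            flagged[name] = None
            continue
        delta = abs(current[name] - ref)
        if ref == 0:
            rel = 0.0 if delta == 0 else math.inf
        else:
            rel = delta / abs(ref)
        if rel > max_rel_change:
            flagged[name] = rel
    return flagged


baseline = {"mean": 512.0, "skewness": 0.42, "glcm_contrast": 18.5}       # hypothetical
after_upgrade = {"mean": 530.0, "skewness": 0.43, "glcm_contrast": 23.1}  # hypothetical
print(sorted(flag_drift(baseline, after_upgrade)))  # ['glcm_contrast']
```

In this made-up example only the texture feature crosses the tolerance, consistent with texture features being the most sensitive to hardware and software changes.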

Future Directions in Radiomic Harmonization

The field of radiomic harmonization is rapidly evolving, with new AI-driven techniques promising to make the process more automated, scalable, and effective.

AI-driven harmonization pipelines

The future lies in creating fully automated systems that can take in data from any scanner and output harmonized, analysis-ready images or features. These AI-driven pipelines could learn to identify and correct for vendor-specific and protocol-specific artifacts in real time, creating a “universal” representation of the data that is independent of its source.

Federated learning for multi-site feature standardization

One of the biggest challenges in developing robust AI is accessing large, diverse datasets while protecting patient privacy. Federated learning offers one solution. In this approach, an AI model is trained across multiple hospitals without the patient data ever leaving its original location. The model “travels” to each site, learns from the local data, and a central server aggregates the resulting model updates. This makes it possible to develop harmonized, generalizable models from multi-site data at a scale no single institution could reach on its own.
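The aggregation step is often done with federated averaging (FedAvg): each site trains locally and sends back only its updated model parameters, and the server averages them, weighted by how many cases each site contributed. The sketch below shows only that server-side averaging, with hypothetical sites and parameter vectors. Local training, communication, and privacy safeguards such as secure aggregation are left out, and other aggregation rules exist.

```python
def federated_average(site_updates):
    """FedAvg-style aggregation: sample-size-weighted mean of model parameters.

    site_updates is a list of (n_local_cases, parameters) pairs, one per
    hospital. Only parameters reach the server; images and labels stay on site.
    """
    total = sum(n for n, _ in site_updates)
    dim = len(site_updates[0][1])
    global_params = [0.0] * dim
    for n, params in site_updates:
        if len(params) != dim:
            raise ValueError("all sites must share the same model architecture")
        weight = n / total
        for i, p in enumerate(params):
            global_params[i] += weight * p
    return global_params


# Hypothetical round: hospital 1 trained on 300 cases, hospital 2 on 100
updates = [(300, [0.20, -1.00, 0.50]), (100, [0.60, -0.60, 0.10])]
print([round(p, 3) for p in federated_average(updates)])  # [0.3, -0.9, 0.4]
```

Weighting by case count keeps a small site from pulling the global model as hard as a large one, although it also means large sites dominate when their scanners differ from everyone else's.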

Toward universal radiomics standards

Ultimately, the goal is to establish universal standards for radiomics, much like the standards that exist for laboratory medicine. Global initiatives are working to create consensus on feature definitions, standardized acquisition protocols, and universal benchmarks for validation. Achieving this will be a major step toward making radiomics a routine and reliable part of cancer care worldwide.

Conclusion

Reproducibility, repeatability, and harmonization are the cornerstones of reliable radiomics. Without them, even the most sophisticated AI models are built on a foundation of sand, risking poor performance and a lack of trust from clinicians. For AI-powered diagnostics to fulfill their promise in prostate cancer care, we must ensure that the data they analyze is stable, consistent, and comparable. Through the combined efforts of standardized imaging protocols, advanced harmonization algorithms, and new AI-based correction methods, the field is moving confidently from research into a clinical reality where every patient can benefit from robust, reproducible imaging biomarkers.

Pioneering Cancer Detection with AI and MRI (and CT)

At Bot Image™ AI, we’re on a mission to revolutionize medical imaging through cutting-edge artificial intelligence technology.
