
Challenges in Prostate MRI AI: Small Lesions, Low Contrast, Motion, and Heterogeneity

Despite major advances in artificial intelligence for prostate MRI, several real-world challenges remain. From subtle, low-contrast lesions and patient motion to the sheer biological variability of tumors, these factors test even the most sophisticated models. A perfect algorithm on paper can struggle when faced with the messy reality of clinical imaging. This article breaks down why these issues persist and explores how researchers and clinicians are working together to overcome them.

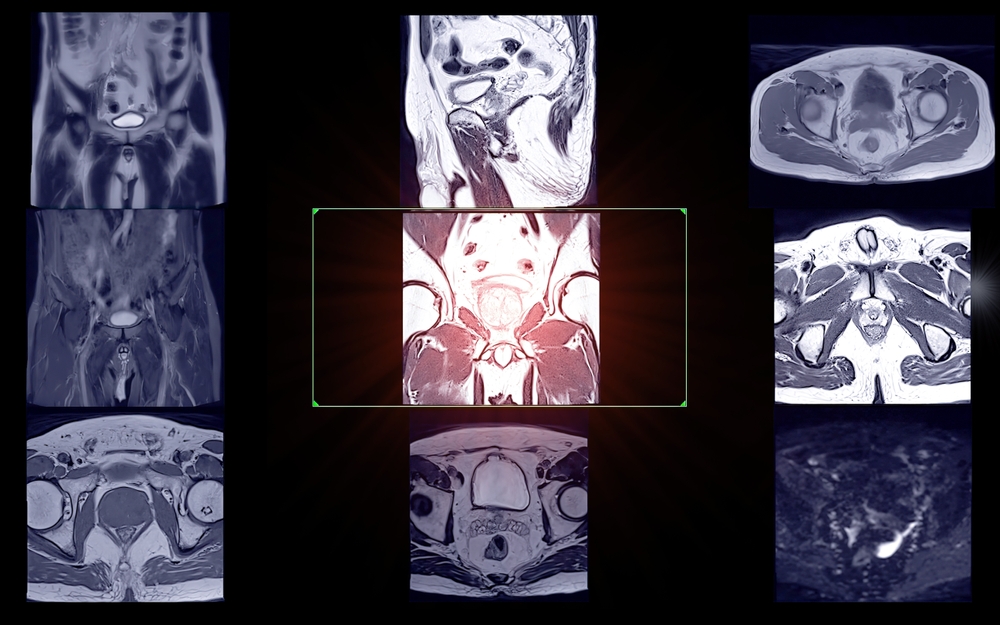

Understanding the Core Challenges in Prostate MRI AI

Before an AI can accurately classify a lesion, it must first contend with the inherent difficulties of prostate imaging. The prostate gland’s location and the nature of MRI technology create a complex environment where pinpointing cancerous tissue is a significant challenge for both human experts and algorithms.

Why prostate MRI remains a difficult imaging problem

The prostate is a small organ located deep within the pelvis, surrounded by other structures like the bladder and rectum. This anatomical positioning makes it susceptible to motion. Natural bodily functions like breathing, digestion, and bladder filling can cause the prostate to shift during a scan, leading to motion artifacts that blur images and obscure details. Furthermore, the signal differences between cancerous tissue and healthy tissue—or even benign conditions like prostatitis—can be incredibly subtle, making interpretation difficult.

The importance of addressing imaging variability

A major hurdle for any AI model is “generalizability,” or its ability to perform well on data it has never seen before. In medical imaging, this is complicated by variability. Different hospitals use MRI scanners from different manufacturers (e.g., Siemens, GE, Philips), each with unique hardware and software. Acquisition protocols (the specific settings used for a scan) can also vary widely. This means an AI trained on data from one hospital may struggle when deployed at another. Addressing this variability is crucial for building robust, reliable AI tools that perform consistently across hospitals and scanners.
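One common way to probe generalizability is to hold out every scan from one hospital at a time and evaluate on that hospital alone. The sketch below shows only the splitting logic; the function name `leave_one_site_out` and the site labels are hypothetical, and a real study would plug its own training and evaluation code into the loop.

```python
def leave_one_site_out(site_ids):
    """Yield (held_out_site, train_indices, test_indices) for each site.

    `site_ids` holds one site label per scan, e.g. the hospital it came from.
    """
    for held_out in sorted(set(site_ids)):
        train = [i for i, s in enumerate(site_ids) if s != held_out]
        test = [i for i, s in enumerate(site_ids) if s == held_out]
        yield held_out, train, test


# Hypothetical site labels for six scans.
scan_sites = ["A", "A", "B", "B", "C", "C"]
for site, train_idx, test_idx in leave_one_site_out(scan_sites):
    print(f"hold out {site}: train on {train_idx}, test on {test_idx}")
```

A large gap between within-site and held-out-site performance is one sign that a model has learned scanner or protocol quirks rather than disease features.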

Detecting and Classifying Small Lesions

One of the primary goals of prostate MRI is to detect cancer at its earliest, most treatable stage. This often means finding very small lesions, a task that pushes the boundaries of imaging technology and AI capabilities.

Why small lesion detection challenges AI models

A small cancerous lesion might only occupy a few voxels (the 3D equivalent of pixels) in an MRI scan. This tiny footprint makes it incredibly difficult for an AI model to distinguish it from random imaging “noise” or normal anatomical texture. The model has very little data to analyze, increasing the risk of both missing the lesion (a false negative) and flagging a benign area by mistake (a false positive).
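To get a feel for how small that footprint is, the following sketch estimates how many voxels a roughly spherical lesion covers. The voxel size used here (0.5 x 0.5 mm in-plane, 3 mm slices) is an assumed, illustrative geometry and not a value taken from any particular protocol.

```python
import math


def lesion_voxel_count(diameter_mm, voxel_size_mm):
    """Approximate number of voxels inside a spherical lesion."""
    radius = diameter_mm / 2.0
    lesion_volume = (4.0 / 3.0) * math.pi * radius ** 3  # mm^3
    dx, dy, dz = voxel_size_mm
    return lesion_volume / (dx * dy * dz)


# Assumed voxel geometry, for illustration only.
voxel = (0.5, 0.5, 3.0)
for diameter in (3.0, 5.0, 10.0):
    count = lesion_voxel_count(diameter, voxel)
    slices = diameter / voxel[2]
    print(f"{diameter:4.1f} mm lesion: ~{count:6.1f} voxels over ~{slices:.1f} slices")
```

Because volume scales with the cube of the diameter, halving the lesion diameter leaves roughly one eighth of the voxels for the model to work with.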

Clinical importance of early and micro-lesion detection

Identifying these small lesions is clinically vital. For patients undergoing initial diagnosis, finding cancer early can lead to better outcomes. For men on active surveillance, detecting a new or growing micro-lesion can be the trigger for recommending treatment. The ability to reliably spot these small but significant findings is a key measure of an AI’s clinical utility.

Improving small-lesion visibility through model design

Researchers are developing advanced AI architectures to tackle this problem. Techniques include:

- Super-resolution: AI models that learn to increase the resolution of an image, effectively making small lesions appear larger and more distinct.

- Attention-based CNNs: Convolutional Neural Networks (CNNs) that are designed to “pay attention” to the most informative regions of an image, helping them focus on subtle lesion characteristics.

- Multi-scale architectures: Models that analyze the image at different magnification levels simultaneously, allowing them to spot both large, obvious tumors and tiny, emerging ones.

These approaches help AI see beyond the obvious and extract meaningful patterns from limited data.
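As a rough illustration of the multi-scale idea, the sketch below builds an image pyramid by repeated 2x2 average pooling, so that the same slice can be examined at several resolutions. This is a NumPy stand-in for what a multi-scale network does internally, not an implementation of any specific architecture; the synthetic slice and the 3x3 "lesion" are made up for the example.

```python
import numpy as np


def downsample2x(image):
    """Halve resolution by averaging non-overlapping 2x2 blocks."""
    h, w = image.shape
    h2, w2 = h - h % 2, w - w % 2  # drop an odd trailing row/column
    cropped = image[:h2, :w2]
    return cropped.reshape(h2 // 2, 2, w2 // 2, 2).mean(axis=(1, 3))


def build_pyramid(image, levels=3):
    """Return [full-res, half-res, quarter-res, ...] versions of a 2D image."""
    pyramid = [np.asarray(image, dtype=np.float64)]
    for _ in range(levels - 1):
        pyramid.append(downsample2x(pyramid[-1]))
    return pyramid


# Synthetic slice: Gaussian noise plus a small bright 3x3 "lesion".
rng = np.random.default_rng(0)
slice_2d = rng.normal(0.0, 1.0, size=(64, 64))
slice_2d[30:33, 30:33] += 4.0

for level, img in enumerate(build_pyramid(slice_2d)):
    print(f"level {level}: shape={img.shape}")
```

Coarser levels suppress noise and capture context, while the finest level keeps the detail a tiny lesion needs, which is why such models typically combine information from all levels rather than picking one.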

Low-Contrast and Ambiguous Lesions in Prostate MRI

Not all tumors are created equal. Some blend almost seamlessly into the surrounding tissue, presenting a low-contrast challenge that can fool both the human eye and standard AI algorithms.

The limits of conventional image contrast

On a standard MRI, the difference in signal intensity (brightness) between a cancerous lesion and healthy prostatic tissue can be minimal. Benign conditions like benign prostatic hyperplasia (BPH) or inflammation from prostatitis can also create ambiguous signals that mimic cancer. When the contrast is low, classification becomes a judgment call with a high degree of uncertainty.

Role of radiomics and deep features in overcoming low contrast

This is where advanced AI techniques shine. Radiomics involves extracting hundreds of quantitative features from an image that describe a lesion’s shape, texture, and intensity patterns. These mathematical features can capture subtle variations that are invisible to the naked eye. Similarly, deep learning models learn their own “deep features,” complex patterns derived from analyzing thousands of examples. These features help the AI see beyond simple contrast and identify the underlying signature of cancerous tissue.
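A minimal sketch of what "extracting quantitative features" can look like is given below. It computes a handful of first-order intensity features inside a lesion mask using plain NumPy. The function name, the bin count, and the choice of features are assumptions made for illustration; production radiomics pipelines compute many more features and follow standardized definitions.

```python
import numpy as np


def first_order_features(image, mask, bins=32):
    """Compute simple intensity statistics for the voxels where mask is True."""
    values = np.asarray(image, dtype=np.float64)[mask]
    mean = values.mean()
    std = values.std()
    # Skewness: asymmetry of the intensity distribution (0 if perfectly flat).
    skewness = 0.0 if std == 0 else float(np.mean(((values - mean) / std) ** 3))
    # Histogram entropy: higher when intensities are spread over many bins.
    counts, _ = np.histogram(values, bins=bins)
    p = counts[counts > 0] / counts.sum()
    entropy = float(-np.sum(p * np.log2(p)))
    return {"mean": float(mean), "std": float(std),
            "skewness": skewness, "entropy": entropy}


# Synthetic example: a noisy patch with a square region of interest.
rng = np.random.default_rng(0)
patch = rng.normal(100.0, 15.0, size=(32, 32))
roi = np.zeros(patch.shape, dtype=bool)
roi[10:20, 10:20] = True
print(first_order_features(patch, roi))
```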

Sequence selection and protocol optimization

No single MRI sequence tells the whole story. A comprehensive prostate MRI exam combines multiple types of images to improve tumor conspicuity. T2-weighted (T2W) images provide excellent anatomical detail, while Diffusion-Weighted Imaging (DWI) and the corresponding Apparent Diffusion Coefficient (ADC) map reveal information about water molecule movement, which is restricted in dense cancerous tissue. By analyzing these sequences together, AI can build a more complete and confident picture, overcoming the limitations of any single view.
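The relationship between DWI and ADC can be made concrete with the standard mono-exponential model, S_b = S_0 * exp(-b * ADC), which rearranges to ADC = ln(S_0 / S_b) / b. The sketch below applies that formula voxel by voxel. The b-value of 800 s/mm^2 and the tissue ADC values are assumed, illustrative numbers; scanners typically fit ADC from several b-values rather than two.

```python
import numpy as np


def adc_map(s0, sb, b_value, eps=1e-6):
    """Two-point ADC estimate from a b=0 image and one diffusion-weighted image.

    Signals are clipped to `eps` to avoid log(0) and division by zero.
    """
    s0 = np.clip(np.asarray(s0, dtype=np.float64), eps, None)
    sb = np.clip(np.asarray(sb, dtype=np.float64), eps, None)
    return np.log(s0 / sb) / b_value


# Illustrative values only: one "freer diffusion" voxel, one "restricted" voxel.
b = 800.0                               # s/mm^2 (assumed)
true_adc = np.array([1.5e-3, 0.7e-3])   # mm^2/s (assumed)
s0 = np.array([1000.0, 1000.0])
sb = s0 * np.exp(-b * true_adc)         # simulate the diffusion-weighted signal

print("DWI signal:", sb)
print("Recovered ADC:", adc_map(s0, sb, b))
```

Note how the restricted voxel stays brighter on DWI yet yields a lower ADC, which is the pattern the paragraph above describes for dense tissue.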

Motion Artifacts and Their Impact on Model Accuracy

An MRI scan can take 30-45 minutes, and during that time, the patient must remain perfectly still. Even slight movements can introduce artifacts that degrade image quality and compromise the accuracy of any subsequent analysis.

How patient motion distorts prostate MRI data

Motion during a scan can come from several sources. The patient might breathe, cough, or simply shift position. Internal, involuntary movements, such as gas in the rectum or the bladder filling up, can also cause the prostate to move. These movements result in blurring, ghosting, or streaking artifacts on the final images, making it difficult to delineate tissue boundaries and assess internal structures.

Motion-induced feature instability

These artifacts are particularly damaging for AI models that rely on quantitative features. A motion-blurred lesion will have different texture and boundary characteristics than a sharply defined one. This “feature instability” means that the data fed into the AI is unreliable. The model may fail to recognize a lesion it would otherwise have detected, or it might misinterpret an artifact as a sign of disease.
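Feature instability is easy to reproduce on synthetic data. In the sketch below, a moving-average blur along one axis serves as a crude stand-in for motion blur (real motion artifacts in MRI are more complex and often appear as ghosting), and a simple texture measure, the intensity standard deviation inside a region, is computed before and after.

```python
import numpy as np


def box_blur_rows(image, width):
    """Blur each row with a moving average; a crude proxy for motion blur."""
    kernel = np.ones(width) / width
    return np.apply_along_axis(
        lambda row: np.convolve(row, kernel, mode="same"), 1, image
    )


def texture_std(image, mask):
    """Standard deviation of intensities inside the mask."""
    return float(image[mask].std())


# Synthetic textured patch and an interior region of interest.
rng = np.random.default_rng(1)
patch = rng.normal(100.0, 10.0, size=(32, 32))
mask = np.zeros(patch.shape, dtype=bool)
mask[8:24, 8:24] = True

sharp = texture_std(patch, mask)
blurred = texture_std(box_blur_rows(patch, 5), mask)
print(f"texture std, sharp:   {sharp:.2f}")
print(f"texture std, blurred: {blurred:.2f}")
```

The underlying "tissue" is identical in both cases; only the acquisition differs, yet the feature value changes substantially. A model that learned a threshold on the sharp value would see the blurred region as a different kind of tissue.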

Motion correction and compensation strategies

The medical imaging community is actively developing solutions to mitigate motion. These strategies include:

- Motion-robust acquisition: Faster MRI sequences that can capture images before significant motion occurs.

- Retrospective correction: AI algorithms that can identify and computationally remove motion artifacts from an already-acquired image.

- Prospective correction: Advanced systems that track patient motion in real-time and adjust the scan parameters on the fly to compensate.

These innovations are critical for ensuring that AI models receive clean, high-quality data.
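As a very simplified illustration of retrospective correction, the sketch below estimates a rigid, whole-pixel shift between two images using phase correlation and then undoes it. This is a classical signal-processing technique used here as a stand-in; it is not one of the AI-based methods mentioned above, and real prostate motion is non-rigid and cannot be fully described by a single translation.

```python
import numpy as np


def estimate_shift(reference, moved):
    """Estimate the integer (row, col) shift of `moved` relative to `reference`."""
    f_ref = np.fft.fft2(reference)
    f_mov = np.fft.fft2(moved)
    cross_power = f_mov * np.conj(f_ref)
    cross_power /= np.abs(cross_power) + 1e-12  # keep phase only
    correlation = np.fft.ifft2(cross_power).real
    peak = np.unravel_index(np.argmax(correlation), correlation.shape)
    # Peaks past the midpoint of an axis correspond to negative shifts.
    return tuple(
        int(p) if p <= s // 2 else int(p) - s
        for p, s in zip(peak, correlation.shape)
    )


# Simulate motion as a circular shift of 3 rows down and 2 columns left.
rng = np.random.default_rng(2)
reference = rng.normal(size=(64, 64))
moved = np.roll(reference, shift=(3, -2), axis=(0, 1))

shift = estimate_shift(reference, moved)
corrected = np.roll(moved, shift=(-shift[0], -shift[1]), axis=(0, 1))
print("estimated shift:", shift)
print("restored:", np.allclose(corrected, reference))
```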

Tumor and Tissue Heterogeneity — The Ultimate AI Challenge

Perhaps the most complex challenge in prostate MRI is heterogeneity—the immense biological variability found in prostate cancer. Tumors are not uniform masses; they are complex, diverse ecosystems.

Biological variability in prostate cancer

Prostate tumors can vary dramatically in their aggressiveness, shape, cellular density, and internal structure. A single patient can even have multiple distinct tumors, each with a different Gleason score and prognosis. Furthermore, a single large tumor can contain a mixture of high-grade and low-grade cancer, a phenomenon known as intra-tumoral heterogeneity.

Imaging signatures of heterogeneity

This biological complexity is reflected in the MRI. A heterogeneous tumor may show a chaotic mix of signal intensities and textures. AI techniques, particularly radiomics, can be used to quantify this variation. By measuring features related to texture and intensity distribution, these models can generate an “imaging signature” that reflects the tumor’s underlying biology.

Why heterogeneity complicates lesion classification

Heterogeneity poses a major problem for AI models. An algorithm trained primarily on uniform, well-defined tumors may become confused when presented with a lesion containing mixed signals. It might average out the features and assign an intermediate risk score, potentially underestimating the danger of a small but aggressive component within the tumor. Accounting for this variability requires extremely large and diverse training datasets.
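The averaging problem can be shown with a toy example. Suppose a model produces a per-voxel risk score for a lesion (a hypothetical setup; the scores below are invented for illustration). Summarizing the lesion by its mean hides a small high-risk focus, while an upper percentile keeps it visible.

```python
import numpy as np


def aggregate_risk(voxel_risk, percentile=95):
    """Summarize per-voxel risk scores by their mean and an upper percentile."""
    voxel_risk = np.asarray(voxel_risk, dtype=np.float64)
    return {
        "mean": float(voxel_risk.mean()),
        "upper": float(np.percentile(voxel_risk, percentile)),
    }


# Invented scores: 90% of the lesion looks low-risk, 10% looks aggressive.
lesion = np.full(100, 0.2)
lesion[:10] = 0.9

summary = aggregate_risk(lesion)
print(f"mean risk:            {summary['mean']:.2f}")
print(f"95th-percentile risk: {summary['upper']:.2f}")
```

Which summary is appropriate is a clinical and modeling decision; the point of the sketch is only that the choice of aggregation can decide whether an aggressive component is reported or averaged away.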

Combining Technical and Clinical Approaches to Overcome Limitations

Solving these challenges isn’t just a technical problem; it requires a deep partnership between engineers and the clinicians who will use the technology.

Cross-disciplinary collaboration between radiologists and engineers

The best AI models are built with constant feedback from clinical experts. Radiologists can help engineers understand which imaging features are most important and provide high-quality annotations to train the models. In turn, engineers can show radiologists how the AI “sees” an image, leading to a co-learning process that improves both human and machine performance.

Role of standardization and harmonization initiatives

To combat data variability, organizations like the Quantitative Imaging Biomarkers Alliance (QIBA) and the Image Biomarker Standardisation Initiative (IBSI) are working to create global standards. These initiatives provide guidelines for how to acquire MRI scans and how to calculate radiomic features, ensuring that data is more consistent across different hospitals and scanners.
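On the data side, one simple harmonization step is to rescale features separately for each site so that scanner-specific offsets and scales are removed. The sketch below does this with a per-site z-score. It is a deliberately naive baseline and not a procedure prescribed by QIBA or IBSI; the function name and the synthetic data are assumptions, and the approach would also erase genuine differences between patient populations at different sites.

```python
import numpy as np


def zscore_per_site(features, site_ids):
    """Standardize each feature column within each site.

    `features` is a 2D array (cases x features); `site_ids` has one label per case.
    """
    features = np.asarray(features, dtype=np.float64)
    site_ids = np.asarray(site_ids)
    out = np.empty_like(features)
    for site in np.unique(site_ids):
        rows = site_ids == site
        mu = features[rows].mean(axis=0)
        sigma = features[rows].std(axis=0)
        sigma[sigma == 0] = 1.0  # avoid division by zero for constant features
        out[rows] = (features[rows] - mu) / sigma
    return out


# Synthetic features from two sites with very different intensity scales.
rng = np.random.default_rng(3)
site_a = rng.normal(100.0, 10.0, size=(50, 2))
site_b = rng.normal(300.0, 40.0, size=(50, 2))
features = np.vstack([site_a, site_b])
sites = np.array(["A"] * 50 + ["B"] * 50)

harmonized = zscore_per_site(features, sites)
print("site A mean after:", harmonized[sites == "A"].mean(axis=0).round(6))
print("site B mean after:", harmonized[sites == "B"].mean(axis=0).round(6))
```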

Incorporating clinical priors for contextual AI

An MRI scan is just one piece of the puzzle. AI models can be made more robust by giving them access to other clinical data, or “priors.” Information like a patient’s PSA level, age, and prior biopsy results can provide valuable context that helps the AI resolve ambiguous imaging findings. This multi-modal approach more closely mimics how a human physician makes a diagnosis.
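One simple way to think about clinical context is as a pre-test probability that the imaging finding then updates. The sketch below uses the odds form of Bayes' rule: posterior odds equal prior odds times a likelihood ratio. The prior probabilities and the likelihood ratio are invented for illustration, and real multi-modal models usually learn this combination from data rather than applying the rule explicitly.

```python
def posterior_probability(prior_prob, likelihood_ratio):
    """Update a pre-test probability with a likelihood ratio (Bayes, odds form)."""
    prior_odds = prior_prob / (1.0 - prior_prob)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1.0 + posterior_odds)


# The same ambiguous imaging finding (assumed likelihood ratio of 2.0)
# interpreted against two hypothetical clinical contexts.
imaging_lr = 2.0
for label, prior in [("low clinical suspicion", 0.10),
                     ("high clinical suspicion", 0.40)]:
    post = posterior_probability(prior, imaging_lr)
    print(f"{label}: prior={prior:.2f} -> posterior={post:.2f}")
```

The identical image evidence leads to noticeably different conclusions depending on the context, which is the behavior the paragraph above attributes to a physician combining imaging with PSA, age, and biopsy history.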

The Road Ahead — Research and Future Solutions

The field of AI is evolving rapidly, and new techniques are constantly emerging to address these long-standing challenges.

Synthetic data and generative modeling

One way to overcome the lack of diverse training data is to create it artificially. Generative Adversarial Networks (GANs) and other generative models can learn the characteristics of prostate MRI and then generate new, synthetic images of rare lesion types or from underrepresented patient populations, helping to make AI models more robust.

Self-supervised and few-shot learning for scarce examples

New AI training methods are reducing the need for massive labeled datasets. Self-supervised learning allows a model to learn from vast amounts of unlabeled data, while few-shot learning aims to classify new types of lesions after seeing only a handful of labeled examples. Because labeled examples of small lesions are scarce, these techniques are well suited to the “small lesion” problem.
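One common few-shot recipe is nearest-prototype classification: average the embeddings of the few labeled examples per class, then assign each new case to the closest average. The sketch below assumes the embeddings already exist (for instance from a self-supervised encoder, which is not shown); the two-dimensional vectors and class names are made up for illustration.

```python
import numpy as np


def fit_prototypes(support_embeddings, support_labels):
    """Compute one mean embedding (prototype) per class from a few examples."""
    emb = np.asarray(support_embeddings, dtype=np.float64)
    labels = np.asarray(support_labels)
    classes = np.unique(labels)
    prototypes = np.stack([emb[labels == c].mean(axis=0) for c in classes])
    return classes, prototypes


def classify(query_embeddings, classes, prototypes):
    """Assign each query to the class with the nearest prototype (Euclidean)."""
    q = np.asarray(query_embeddings, dtype=np.float64)
    dists = np.linalg.norm(q[:, None, :] - prototypes[None, :, :], axis=2)
    return classes[np.argmin(dists, axis=1)]


# Two labeled examples per class (made-up 2D embeddings).
support = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [6.0, 5.0]]
labels = ["benign", "benign", "cancer", "cancer"]
classes, prototypes = fit_prototypes(support, labels)

queries = [[0.2, 0.3], [5.5, 4.8]]
print(classify(queries, classes, prototypes))
```

The quality of such a classifier depends almost entirely on the embedding space, which is why few-shot methods are usually paired with a strong pretrained encoder.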

Standardizing datasets for global reproducibility

Ultimately, the best way to build a truly generalizable AI is to train it on globally diverse data. There is a growing call in the research community for large, multi-institutional, and publicly available datasets that capture the full range of scanner types, patient populations, and disease variations found in the real world.

Conclusion

The path to perfecting AI for prostate MRI is paved with significant challenges. Small lesion size, low image contrast, patient motion, and profound biological heterogeneity remain key barriers to achieving flawless performance. These are not simple problems with easy fixes.

However, the field is making incredible progress on all fronts. Through improved imaging protocols, industry-wide data harmonization efforts, and the development of more advanced and adaptable AI architectures, we are systematically closing these gaps. Each challenge overcome moves us closer to a future of consistent, trustworthy, and clinically meaningful AI-assisted prostate cancer detection—empowering clinicians with the confidence to make better decisions for every patient.

Pioneering Cancer Detection with AI and MRI (and CT)

At Bot Image™ AI, we’re on a mission to revolutionize medical imaging through cutting-edge artificial intelligence technology.
